GPT as Cognitive Scaffold: Building Tools That Think With You

TL;DR

GPT can serve as a **cognitive scaffold** that supports human thinking by structuring the problem-solving process. Structured prompts, including JSON templates, guide the model’s reasoning, improve clarity, and make common pitfalls such as hallucinations easier to spot. By chaining outputs, GPT can carry an iterative thought process across several steps, which makes it a useful partner in complex tasks. As of 2025, personalized cognitive assistants offering tailored support look like a likely next step.

Key Q&A

Question 1: What is a cognitive scaffold in education?

A cognitive scaffold breaks learning into manageable parts, providing support to help learners achieve goals they couldn’t reach alone.

Question 2: How can GPT function as a cognitive scaffold?

GPT can structure problem-solving, offload mental effort, and enhance reasoning by providing prompts and organizing thoughts.

Question 3: What is chain-of-thought prompting?

Chain-of-thought prompting asks GPT to work through a problem step by step, which often improves accuracy on multi-step reasoning tasks.

Question 4: How does JSON formatting help with GPT outputs?

Asking for JSON output gives responses a fixed structure, which guides GPT’s reasoning and makes responses clearer, more organized, and easier to parse.

Question 5: What is function calling in the OpenAI API?

Function calling lets developers define a schema for a function’s arguments so GPT returns output in that format; with strict mode enabled, the output is constrained to match the schema, improving consistency and reliability.

Question 6: How can GPT assist in product ideation?

GPT helps brainstorm by outlining assumptions, steps, critiques, and follow-up questions, aiding systematic exploration of ideas.

Question 7: What are the limitations of using GPT as a cognitive scaffold?

Limitations include potential hallucinations, context memory limits, rigid outputs, and the risk of over-reliance on AI for cognitive tasks.

Question 8: How can structured prompts improve AI interactions?

Structured prompts clarify expectations, leading to more focused and relevant responses from GPT, enhancing overall output quality.

Question 9: What future developments are anticipated for cognitive scaffolding in AI?

Future developments may include more personalized AI thought partners that adapt to individual user needs and workflows.

Question 10: How does GPT’s role as a cognitive scaffold change human-AI interaction?

GPT transforms from a mere tool to a collaborative partner, enhancing human creativity and problem-solving through structured support.

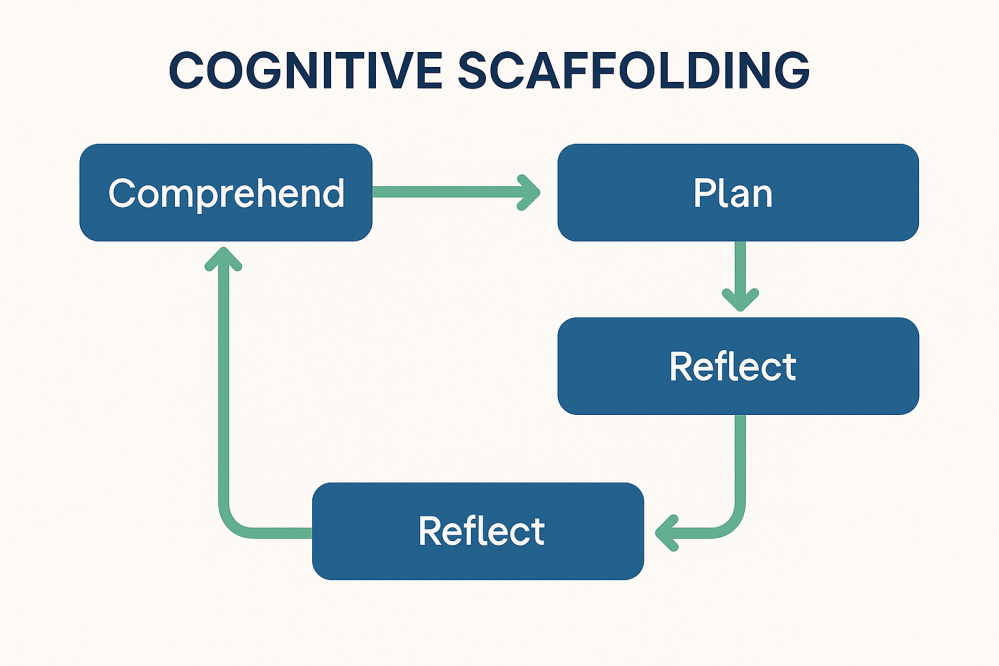

GPT can be more than a text generator – it can act as an extension of our own thought process. In the image above, a friendly robot works at a laptop surrounded by JSON snippets. This hints at a powerful idea: with the right structure, AI can help organize and support our thinking. Instead of treating GPT as a magic eight-ball that spits out answers, we can use it as a cognitive scaffold – a tool that holds up our ideas, helps shape our reasoning, and collaborates with us in solving problems. In this post, we’ll explore how GPT-4 and similar models can augment human thinking when we build the right scaffolds around them. We’ll draw from education and cognitive science, get hands-on with structured prompts (even some JSON and code), and peek into the future of “tools that think with you.” So grab a coffee, and let’s dive in!

What Is a “Cognitive Scaffold,” Anyway?

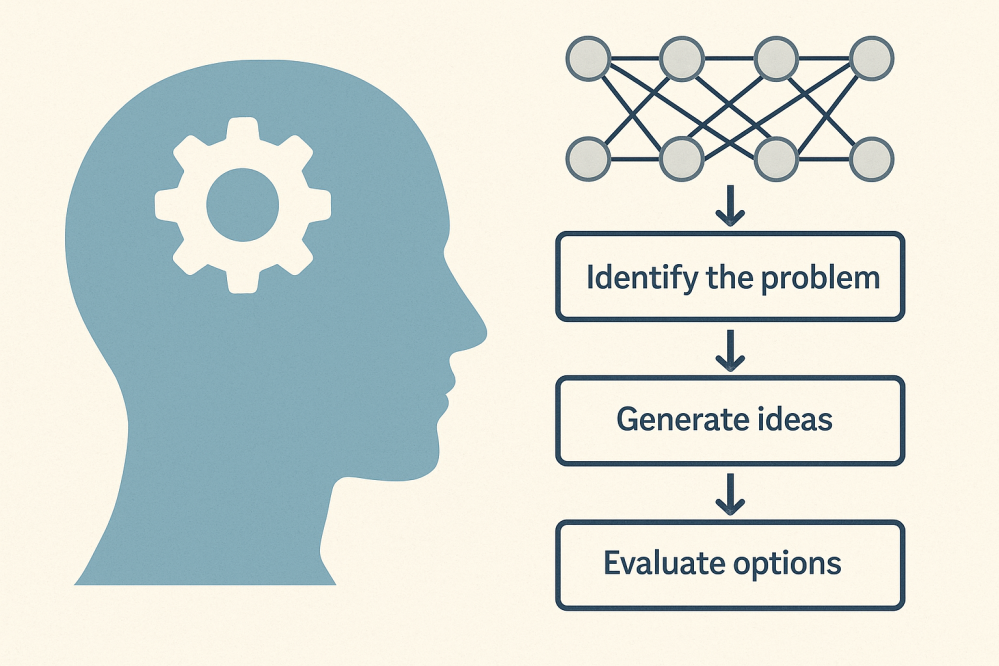

In educational psychology, scaffolding refers to breaking up learning into manageable chunks and giving a tool or structure to support each chunk [edutopia.org]. For example, a teacher might first introduce key vocabulary, then an outline, then a step-by-step guide, instead of just saying “Write an essay from scratch.” The scaffold provides temporary support, helping the learner achieve what they couldn’t on their own – a concept rooted in Vygotsky’s idea of the Zone of Proximal Development (the gap between what you can do alone and what you can do with guidance [edutopia.org]). Eventually, the supports can be removed as the learner grows more capable.

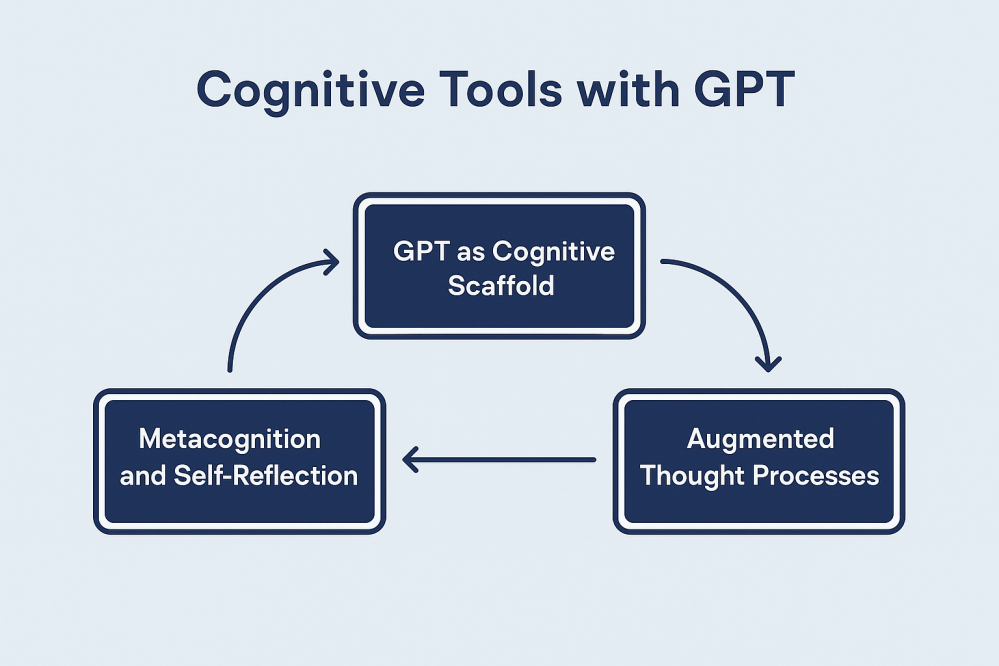

GPT as a cognitive scaffold means using the AI in a similar supportive role: structuring our problem-solving process, offloading mental effort, and augmenting our capabilities. Think of how we use pen and paper to sketch ideas or how talking to a rubber duck can clarify a programmer’s thoughts. GPT can serve that function – but with a lot more knowledge and interactivity. It can ask us questions, summarize our ideas, critique our reasoning, and suggest next steps. As one commentator put it, AI (especially large language models) is becoming “a cognitive scaffold, augmenting and influencing the ways we process, interpret, and synthesize ideas” [psychologytoday.com]. The key is that we remain in charge of the thinking process, but the AI provides structure and prompts that elevate our thinking to a new level.

GPT’s Reactive Nature (and Why Structure Helps)

If you’ve used ChatGPT or similar models, you know they’re incredibly fluent and knowledgeable – but also highly reactive. By default, an LLM waits for your prompt, then generates a response in one go. It doesn’t plan multiple steps ahead on its own, and it has no innate “self-correction” mechanism beyond what’s in the prompt. Ask a complex question, and it will attempt an answer immediately, often by pulling in relevant facts and patterns it learned during training. This reactive pattern can sometimes give the illusion of reasoning, but the model may actually be skipping important thinking steps or making unwarranted assumptions, simply because you didn’t explicitly ask it to show its work.

Researchers have argued that autoregressive LLMs (like GPT-4) “cannot, by themselves, do planning or self-verification” – they’re basically giant next-word predictors [arxiv.org]. The model will smoothly present an answer that sounds plausible, even if it hasn’t truly checked all the details. This is why we see things like hallucinations (confidently stated false information) or reasoning errors in complex problems. The AI isn’t dumb; it’s just doing exactly what we ask: giving a continuation of the prompt. If the prompt doesn’t demand structured thinking or verification, the default behavior is a single-shot response that “merely sounds correct.”

The good news is that we, as users or developers, can change this behavior by changing how we prompt. A major insight from the past couple of years is that structured prompting leads to better reasoning. For instance, simply telling the model “Let’s think step by step” often prompts it to break down a problem and reason more carefully, dramatically improving accuracy on math and logic tasks [ibm.com]. This is known as chain-of-thought (CoT) prompting, and it works because we’re nudging the AI to mimic the step-by-step reasoning that a human might do internally. Instead of jumping straight to an answer, the model enumerates intermediate steps or thoughts. Techniques like CoT and prompt chaining (feeding the model multiple sequential prompts, each building on the last) guide GPT to reason through a problem methodically rather than blurting out an answer that “merely sounds correct” [ibm.com]. In short, if we want the model to think, we have to prompt it in a way that makes the thinking explicit.
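To make the two techniques concrete, here is a minimal Python sketch. It assumes a hypothetical `ask(prompt)` function standing in for whatever model API you use: `chain_of_thought` just appends the “Let’s think step by step” cue, and `prompt_chain` feeds each stage’s output into the next stage’s prompt. It illustrates the pattern, not any particular library’s API.

```python
from typing import Callable, List

# Hypothetical model interface: any function that takes a prompt string and
# returns the model's reply (for example, a thin wrapper around an LLM API).
Ask = Callable[[str], str]


def chain_of_thought(question: str) -> str:
    """Zero-shot chain-of-thought: append the step-by-step cue to the question."""
    return f"{question}\n\nLet's think step by step."


def prompt_chain(ask: Ask, task: str, stages: List[str]) -> List[str]:
    """Prompt chaining: each stage receives the previous stage's output as input."""
    outputs: List[str] = []
    previous = task
    for stage in stages:
        previous = ask(f"{stage}\n\nInput:\n{previous}")
        outputs.append(previous)
    return outputs


if __name__ == "__main__":
    # Stand-in model that echoes the first line of its prompt, so the chain's
    # wiring can be inspected without calling a real API.
    def echo(prompt: str) -> str:
        return f"[reply to: {prompt.splitlines()[0]}]"

    print(chain_of_thought("A train covers 90 km in 1.5 hours. What is its average speed?"))
    for out in prompt_chain(echo, "Launch a carbon-tracking app",
                            ["List the subtasks.", "Rank the subtasks by risk."]):
        print(out)
```

Swapping `echo` for a real API wrapper is the only change needed to run the chain against a model.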

Using JSON to Simulate Structured Thinking

One surprisingly effective way to enforce structured output (and thus structured thinking) is to use JSON formatting in our prompts and outputs. I discovered this trick while trying to get consistent, parseable answers from GPT for programming tasks, but it turns out to be a general-purpose superpower. GPT models have been trained on a lot of JSON and code, so they’re very comfortable producing and following JSON schemas [artsen.h3x.xyz]. In fact, the web is full of JSON data (almost half of pages contain JSON-LD structured data snippets [artsen.h3x.xyz]), so the model “feels at home” when you ask for a JSON-formatted response. But JSON isn’t just for data interchange – we can use it to structure the cognitive process of the AI.

How does this work? Essentially, we define a JSON template for the kind of thinking steps we want, and ask GPT to fill it in. By giving the model an empty scaffold to complete, we’re guiding its reasoning. It’s like giving a student a worksheet with sections to fill out: it clarifies what’s expected. The curly braces, keys, and arrays act as signposts for different parts of the task. GPT will follow the pattern diligently because it’s great at mimicking structures – “give it something to copy” and it will oblige.
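Here’s a rough sketch of building such a worksheet prompt in Python. The helper name `scaffold_prompt` and the exact wording of the instruction are my own assumptions; the point is that the keys become signposts and the `<...>` placeholders become the blanks GPT fills in.

```python
import json
from typing import Dict


def scaffold_prompt(task: str, fields: Dict[str, str]) -> str:
    """Build a prompt asking the model to fill in a JSON 'worksheet'.

    `fields` maps each JSON key to a short hint describing what belongs there.
    """
    # An empty skeleton: keys act as signposts, placeholder values as blanks.
    skeleton = {key: f"<{hint}>" for key, hint in fields.items()}
    return (
        f"{task}\n\n"
        "Respond with only a JSON object in exactly this shape, "
        "replacing each placeholder with your content:\n"
        + json.dumps(skeleton, indent=2)
    )


SCAFFOLD_FIELDS = {
    "assumptions": "list of assumptions or premises",
    "steps": "list of steps in a plan or thought process",
    "critique": "weaknesses or risks, as a critical friend would see them",
    "next_prompt": "what the user should ask or explore next",
}

print(scaffold_prompt("Analyze this idea: an app that tracks personal carbon footprints.",
                      SCAFFOLD_FIELDS))
```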

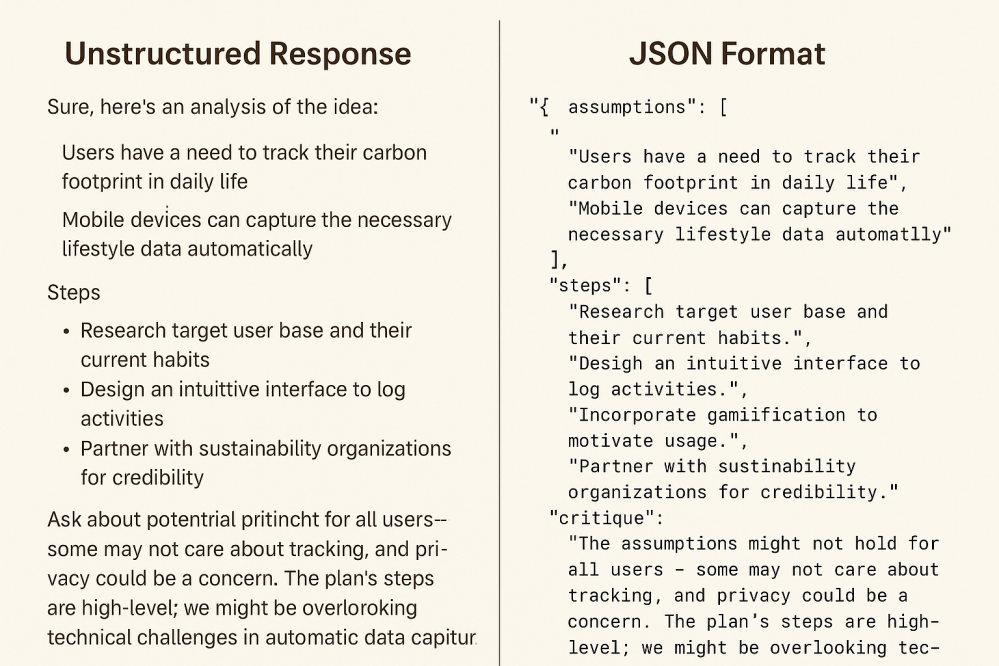

Let’s make this concrete. Suppose I’m brainstorming a new product idea and I want GPT’s help in a structured way. I might prompt it with a request like: “Analyze this idea and return a JSON with four fields: assumptions, steps, critique, and next_prompt. Under assumptions, list any assumptions or premises. Under steps, outline a plan or thought process. Under critique, be a critical friend and point out weaknesses or risks. Finally, under next_prompt, suggest what I should ask or explore next.” The model will then produce something like this:

```json
{
  "assumptions": [
    "Users have a need to track their carbon footprint in daily life",
    "Mobile devices can capture the necessary lifestyle data automatically"
  ],
  "steps": [
    "Research target user base and their current habits",
    "Design an intuitive interface to log activities",
    "Incorporate gamification to motivate usage",
    "Partner with sustainability organizations for credibility"
  ],
  "critique": "The assumptions might not hold for all users – some may not care about tracking, and privacy could be a concern. The plan’s steps are high-level; we might be overlooking technical challenges in automatic data capture.",
  "next_prompt": "Ask about potential privacy issues and data sources for automatic carbon tracking."
}
```

Look at what we got: GPT didn’t just answer with a single block of text or a loose list of ideas – it organized the response into clear sections. We see the assumptions it’s making, a series of steps or ideas to pursue, a critique of the idea (catching issues I might have missed), and a suggestion for what to ask next. In a sense, we’ve gotten GPT to simulate an internal dialogue and externalize it in a structured form. The JSON keys are acting like headings for different mental stages: context, planning, self-critique, and follow-up.

This approach works so well because JSON is unambiguous and forces clarity. I’m literally telling the AI, “Put your answer here, your reasoning there, your doubts here, etc.” There’s no room for it to wander off into a rambling essay – the scaffold keeps it on task. I’ve found that using JSON schemas in the system or user prompt (as a sort of guide) makes GPT’s outputs much more predictable and easier to work with. It’s a bit like Mad Libs for AI reasoning: you give it the blanks, it fills them in. And as a bonus, the result is machine-readable (great if you’re coding something on top of GPT) and easy to scan for a human.
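Because the reply is meant to be machine-readable, it’s worth parsing and checking it rather than trusting it blindly. The sketch below (the `parse_scaffold` helper is hypothetical) tolerates a bit of chatty text around the JSON by slicing from the first `{` to the last `}`, then verifies that each of the four fields exists with the expected type. It’s a pragmatic heuristic, not a full JSON Schema validator.

```python
import json
from typing import Any, Dict

# Expected Python type for each scaffold field after json.loads.
REQUIRED = {"assumptions": list, "steps": list, "critique": str, "next_prompt": str}


def parse_scaffold(reply: str) -> Dict[str, Any]:
    """Extract the JSON object from a model reply and check the scaffold fields.

    Models sometimes wrap JSON in prose, so only the text between the first
    '{' and the last '}' is parsed.
    """
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in reply")
    data = json.loads(reply[start : end + 1])  # JSONDecodeError is a ValueError
    for key, expected_type in REQUIRED.items():
        if not isinstance(data.get(key), expected_type):
            raise ValueError(f"field {key!r} missing or not a {expected_type.__name__}")
    return data


reply = ('Here is my analysis:\n{"assumptions": ["Users care"], "steps": ["Research"], '
         '"critique": "Privacy", "next_prompt": "Ask about privacy."}')
print(parse_scaffold(reply)["next_prompt"])  # Ask about privacy.
```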

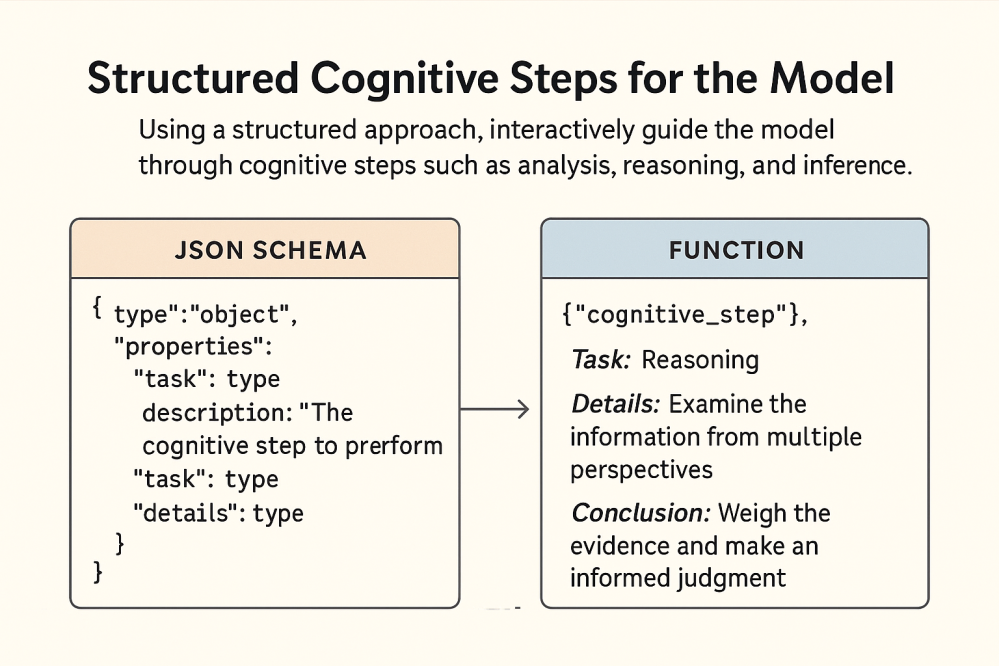

Function Calling: Adding an API for Thought

What if we could guarantee that GPT sticks to this structured format? Manually writing JSON schemas into prompts is useful, but it relies on the model’s compliance and can sometimes go awry if the model decides to be extra verbose. Enter OpenAI’s function calling feature. This is a relatively recent API capability that lets you define a “function” with a schema (essentially a specification of expected fields and types) and have the model output arguments for that function. Under the hood the arguments are still JSON, but the format is now part of the request rather than a polite suggestion in the prompt. Plain function calling makes conforming output much more likely without strictly guaranteeing it; with strict mode enabled (part of OpenAI’s Structured Outputs), the model’s output is constrained to match the schema. Think of it as formally locking in the scaffold.

With function calling, we define something like this for our scaffold:

```json
{
  "name": "cognitive_scaffold",
  "description": "Structure the assistant's thinking into explicit parts.",
  "parameters": {
    "type": "object",
    "properties": {
      "assumptions": {
        "type": "array",
        "items": { "type": "string" },
        "description": "Underlying assumptions or beliefs relevant to the query"
      },
      "steps": {
        "type": "array",
        "items": { "type": "string" },
        "description": "Step-by-step reasoning or plan to address the query"
      },
      "critique": {
        "type": "string",
        "description": "A critical evaluation of the plan or possible issues"
      },
      "next_prompt": {
        "type": "string",
        "description": "A suggested follow-up question to continue the dialogue"
      }
    },
    "required": ["assumptions", "steps", "critique", "next_prompt"]
  }
}
```

When we enable this function in the OpenAI API and ask a question, the model isn’t just free-form chatting anymore: it’s effectively filling out this JSON as a form. The API returns a tool call whose arguments are a JSON string containing those four fields, which your code then parses (and, without strict mode, should validate before trusting). OpenAI built this because developers were already trying to get models to output consistent JSON for integration, albeit in hacky ways. As OpenAI noted when announcing Structured Outputs, “Developers have long been working around the limitations of LLMs in this area via … prompting and retrying requests repeatedly to ensure that model outputs match the formats needed” [openai.com]. Strict function calling is the answer to that: it constrains GPT to a format and “trains the model to better understand complicated schemas” [openai.com]. In our case, the complicated schema is not just for data interchange; it’s for disciplined thinking.
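If you’re using the current Chat Completions `tools` format, the definition above gets wrapped in `{"type": "function", "function": ...}`. Strict mode is switched on with `"strict": true` inside the function object, and it expects every property to be listed in `required` and `additionalProperties` to be false. The sketch below builds that payload from a plain Python dict; the helper name is my own, property descriptions are omitted for brevity, and it only adjusts the top-level object (nested objects would need the same treatment). The OpenAI docs list which schema features strict mode supports.

```python
import copy
from typing import Any, Dict

# The cognitive_scaffold definition as a Python dict (descriptions omitted).
COGNITIVE_SCAFFOLD = {
    "name": "cognitive_scaffold",
    "description": "Structure the assistant's thinking into explicit parts.",
    "parameters": {
        "type": "object",
        "properties": {
            "assumptions": {"type": "array", "items": {"type": "string"}},
            "steps": {"type": "array", "items": {"type": "string"}},
            "critique": {"type": "string"},
            "next_prompt": {"type": "string"},
        },
        "required": ["assumptions", "steps", "critique", "next_prompt"],
    },
}


def as_strict_tool(function_def: Dict[str, Any]) -> Dict[str, Any]:
    """Wrap a function definition in the `tools` format with strict mode on.

    Only the top-level object schema is adjusted: every property becomes
    required and additional properties are disallowed.
    """
    fn = copy.deepcopy(function_def)  # leave the caller's dict untouched
    params = fn["parameters"]
    params["required"] = list(params["properties"])
    params["additionalProperties"] = False
    fn["strict"] = True
    return {"type": "function", "function": fn}


tool = as_strict_tool(COGNITIVE_SCAFFOLD)
# Then: client.chat.completions.create(model=..., messages=..., tools=[tool])
```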

The beauty of using function calling to enforce a cognitive scaffold is reliability. With strict mode, you can count on every required field being present with the right type: no apology paragraphs or flowery intros, just the structured content. Finer constraints, such as exactly five steps in the array, can be expressed in JSON Schema with `minItems` and `maxItems`, but it’s safer to check them in your own code than to assume the API enforces them. In practice, I’ve found that GPT-4 is quite capable of filling in such a schema with insightful content. It’s like having an AI consultant who always delivers their report in the same well-organized format. (If only human consultants were so consistent!)
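Since an exact step count is worth checking yourself, here’s a small retry wrapper. It assumes a hypothetical `get_args(prompt)` function that calls the model with the scaffold schema and returns the parsed arguments as a dict; if `steps` comes back with the wrong length, it asks again with feedback, up to a fixed number of tries.

```python
from typing import Any, Callable, Dict

# Hypothetical: any function that calls the model with the scaffold schema
# and returns the parsed function arguments as a dict.
GetArgs = Callable[[str], Dict[str, Any]]


def scaffold_with_n_steps(get_args: GetArgs, query: str, n: int,
                          max_tries: int = 3) -> Dict[str, Any]:
    """Request a scaffold, retrying with feedback until `steps` has exactly n items."""
    prompt = f"{query}\n\nList exactly {n} steps."
    for _ in range(max_tries):
        data = get_args(prompt)
        got = len(data.get("steps", []))
        if got == n:
            return data
        # Feed the mismatch back so the next attempt can correct it.
        prompt = f"{query}\n\nYour last answer listed {got} step(s); list exactly {n}."
    raise RuntimeError(f"no scaffold with exactly {n} steps after {max_tries} tries")
```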

Chaining Thoughts: Tools Thinking Together

Once you have the model thinking in structured chunks, a natural next step is to chain these outputs together. Imagine you get the JSON output above with a next_prompt suggestion. You can take that next_prompt string (e.g. “Ask about potential privacy issues…”) and feed it right back to the model as the new query, again expecting a cognitive_scaffold function output. In effect, GPT is guiding its own subsequent queries, one round at a time. This kind of loop can simulate an ongoing thought process or iterative planning session.

For example, I could automate a simple loop like:

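```python
import json
from openai import OpenAI  # official SDK; assumes OPENAI_API_KEY is set

client = OpenAI()
# cognitive_scaffold: the function definition above, loaded as a Python dict
tools = [{"type": "function", "function": cognitive_scaffold}]
force = {"type": "function", "function": {"name": "cognitive_scaffold"}}

query = "Brainstorm a new app to help people reduce their carbon footprint."
for turn in range(3):
    reply = client.chat.completions.create(
        model="gpt-4o",  # assumption: any model that supports tool calling
        messages=[{"role": "user", "content": query}],
        tools=tools,
        tool_choice=force,  # always answer via cognitive_scaffold
    )
    # The arguments arrive as a JSON string, not a dict.
    data = json.loads(reply.choices[0].message.tool_calls[0].function.arguments)
    print(f"Round {turn + 1} critique: {data['critique']}")
    query = data["next_prompt"]
```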

In round 1, the model might list assumptions about the app idea, steps to build it, critique the idea (e.g. “users might not stick with it long-term”), and then suggest a next question (e.g. “How can we make it engaging long-term?”). In round 2, it will take that question about engagement and scaffold a new reasoning process (assumptions about user engagement, steps to gamify, critique those, and a new follow-up prompt). Round 3 would continue the pattern. What’s happening is a multi-turn exchange steered by the model’s own follow-up questions, with a structured self-reflection at each step. (In this minimal loop each round sees only the new question, not the earlier rounds; carrying context forward is up to us.) We’ve essentially turned GPT into a planner that can prompt itself, examine its ideas, and then decide what to think about next.

This kind of recursive or reflective loop is very reminiscent of techniques being explored in advanced AI agent frameworks. You might have heard of things like AutoGPT or other “AI agents” that attempt to let GPT generate tasks for itself and complete them in sequence. Those can be hit-or-miss (often hilariously so), but the core concept is powerful: with the right scaffolding, GPT can iterate on a problem, not just answer it one-off. By chaining function calls or prompt-response cycles, we get closer to how a human analyst might work: propose a plan, critique it, refine the plan, dive deeper, and so on. We’re basically giving GPT a way to do what cognitive scientists call “metacognition” – it’s thinking about its own thoughts (in a limited, structured way).

It’s important to note we’re still in control here. We can (and should) review each iteration’s output and decide when to stop or which direction to pursue. The AI might suggest an irrelevant next question or go down a rabbit hole – and we can intervene, just like a supervisor guiding a junior analyst. The scaffold doesn’t make GPT infallible; it just makes its process more transparent and manipulable.
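Keeping a human in the loop can be as simple as a review callback between rounds. In this sketch, `call` is a hypothetical wrapper that returns the scaffold fields as a dict, and `review` decides whether to follow the suggested `next_prompt` or stop; the loop also caps the number of rounds so a rabbit hole can’t run forever.

```python
from typing import Any, Callable, Dict, List

ScaffoldCall = Callable[[str], Dict[str, Any]]  # hypothetical model wrapper


def guided_loop(call: ScaffoldCall, query: str,
                review: Callable[[Dict[str, Any]], bool],
                max_rounds: int = 5) -> List[Dict[str, Any]]:
    """Chain scaffold calls, letting a reviewer stop after any round.

    `review` returns True to continue with the suggested next_prompt,
    or False to stop (e.g. the suggestion is off-topic).
    """
    history: List[Dict[str, Any]] = []
    for _ in range(max_rounds):
        data = call(query)
        history.append(data)
        if not review(data):
            break
        query = data["next_prompt"]
    return history
```

For interactive use, `review` could be `lambda d: input(f"Follow up with {d['next_prompt']!r}? [y/n] ").lower().startswith("y")`, which turns each round into an explicit checkpoint.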

Use Cases: How Cognitive Scaffolding Helps in Practice

All this talk of scaffolds and structured prompts is abstract until we see it in action solving real problems. In my own experience, this approach shines in a few domains:

- ✨ Product Ideation and Brainstorming: As illustrated above, GPT can help break a big idea into assumptions and steps, then critique itself. When I’m fleshing out a concept for an app or feature, I’ll have GPT act as a planning partner. It will list out the foundational assumptions (making me aware of what needs validation), outline a rough plan or components, and even throw darts at the idea to see if it holds water. This is incredibly useful for system designers or entrepreneurs who want to explore an idea space systematically. The AI might surface considerations you overlooked (e.g. “Have you considered the privacy implications?”) early in the process. It’s like having a very diligent brainstorm buddy who never forgets to ask the obvious questions.

- 📝 Academic Writing and Research: When writing an article or paper, I use GPT’s scaffolded output to organize my thoughts. For example, I’ll prompt it to generate an outline with key arguments (`steps`) and counterarguments (`critique`). The `assumptions` section might include my starting premises or the context I’m taking for granted. This has a twofold benefit: (1) it forces me to clarify the structure of my argument before diving into prose, and (2) the critique portion acts as a devil’s advocate, highlighting weak points or gaps in my logic. I’ve found this especially helpful in literature reviews: GPT can list assumptions behind a line of research, enumerate the steps of each argument or experiment, then critique the methodology, all in a tidy format. It doesn’t replace my own analysis, but it absolutely jump-starts and supports it. In essence, GPT becomes a writing coach or research assistant, structuring the thinking process that underlies the writing.

- 🤖 Prompt Engineering and AI Debugging: Here’s a meta use: using GPT to help improve GPT. When I create complex prompts or chains for an AI system, I’ll often ask GPT (in scaffold mode) to analyze my approach. For instance, I might share the current prompt and the observed output, and have it fill in a JSON with `assumptions` (what it thinks I expect the model knows), `steps` (how the model is interpreting the prompt), `critique` (why the output might be going wrong), and `next_prompt` (how I could refine the instruction). This structured reflection often reveals miscommunications between me and the model. Maybe the AI assumed something incorrectly, or a step in reasoning was skipped. By having GPT spell it out, I can debug my prompt strategy. It’s a bit like the model explaining itself or another model, which is extremely useful when you’re developing prompts for complex tasks or chaining multiple AI calls. For developers and researchers, this can save a ton of trial and error when refining your AI interactions.
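For the prompt-debugging use case, the request itself can be templated. This is a sketch with hypothetical names (`debug_request`, `DEBUG_FIELDS`); the field descriptions mirror the ones in the list above, and the observed output is pasted in verbatim so the model can compare intent with result.

```python
import json

# Hypothetical field hints for a "debug my prompt" scaffold.
DEBUG_FIELDS = {
    "assumptions": "what the prompt seems to expect the model to already know",
    "steps": "how the model is likely interpreting the prompt, step by step",
    "critique": "why the observed output might be going wrong",
    "next_prompt": "a revised instruction that could fix the problem",
}


def debug_request(prompt: str, observed_output: str) -> str:
    """Package a prompt and its disappointing output into a scaffold-mode review."""
    return (
        "Review the prompt below and the output it produced.\n\n"
        f"PROMPT:\n{prompt}\n\nOBSERVED OUTPUT:\n{observed_output}\n\n"
        "Answer with only a JSON object with these keys:\n"
        + json.dumps(DEBUG_FIELDS, indent=2)
    )
```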

These are just a few examples, but really any complex, open-ended task can benefit from a bit of scaffolding. Planning an event, analyzing a business strategy, learning a new programming concept – the pattern is the same. You prompt GPT to structure the task into parts, and suddenly the path forward looks clearer (or the holes in your understanding become visible). It’s all about making the implicit explicit.

Limitations: The Scaffold Is Not the House

Before we get too carried away: using GPT as a cognitive scaffold is not a magic wand that fixes all AI issues. It introduces its own challenges and you still have to watch out for the usual suspects:

- Hallucinations Haven’t Vanished: Even in a structured format, GPT can confidently output false or misleading content. It might list an assumption that sounds plausible but is just plain wrong, or cite steps that aren’t the most efficient. The scaffold makes it easier to spot these (since the info is categorized), but it doesn’t eliminate them. Always verify critical facts or steps independently – the AI might be thinking with you, but it can still lead you astray if you don’t maintain a critical eye.

- Context and Memory Limits: Complex scaffolding often means multi-turn interactions or big JSON outputs. Remember that GPT-4 has a finite context window: if you run too many iterations or produce an especially large structured response, you risk hitting that limit. The model might start losing track of assumptions it made earlier if the conversation runs long. Chaining function calls amplifies this: by round 5, does it remember what it assumed in round 1? You may need to recap or feed important points back into the prompt to keep the AI on track. In the future, longer context or external memory tools might help, but for now, there’s a practical limit to how much scaffolding you can stack before the whole thing wobbles.

- Verbose or Rigid Outputs: Sometimes, enforcing a structure can make the model a bit too rigid or verbose. For example, it might always produce a critique even if it has to invent a fairly trivial one, just to fill that field. Or every answer starts to look formulaic (“Assumptions: … Steps: …”). This isn’t inherently bad – consistency is the goal – but it can feel stilted. You might need to tweak the schema or allow some flexibility (maybe make a field optional, or occasionally turn off the scaffold to let the model be creative) depending on the task. There’s a balance between guiding the model and not boxing it in so much that it loses the creative spark or natural tone.

- Over-reliance on the AI: This is more of a human issue. If we lean on GPT for every little cognitive task, there’s a risk we don’t stretch our own mental muscles. The scaffold is meant to assist, not replace, our thinking. I treat it like a very smart calculator for thoughts: great for augmenting my capabilities, but I still want to understand the problem myself. Used properly, GPT as a scaffold amplifies human intellect; used blindly, it could make us intellectually lazy. The goal is a partnership, not a handover of responsibility.
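For the context-limit problem, one cheap version of the recap mentioned above is to carry a short list of earlier assumptions into each new query. The sketch assumes each round’s result is a dict with an `assumptions` list (as in the scaffold schema) and keeps only the most recent few, a crude stand-in for real token counting or summarization.

```python
from typing import Any, Dict, List


def with_recap(next_prompt: str, history: List[Dict[str, Any]], max_items: int = 6) -> str:
    """Prefix the next query with a short recap of earlier assumptions.

    Only the most recent `max_items` assumptions are kept, as a rough way
    to stay inside the context window.
    """
    assumptions = [a for round_data in history for a in round_data.get("assumptions", [])]
    recent = assumptions[-max_items:]
    if not recent:
        return next_prompt
    recap = "\n".join(f"- {a}" for a in recent)
    return f"Assumptions so far:\n{recap}\n\nNext question: {next_prompt}"
```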

Building the Future: Personal GPTs and Thought Partners

Even with those caveats, it’s hard not to be excited about where this is heading. We’re essentially witnessing the emergence of personal AI thought partners. Today, with a bit of prompt engineering and the latest APIs, you can jury-rig a GPT-4 to act as a research assistant, a brainstorming buddy, or a tutor. Tomorrow, this might be far more seamless. I imagine a future where each of us has a personal GPT (on our device or in the cloud) that knows our contexts, our projects, maybe even our personal knowledge base – and it constantly provides cognitive scaffolding tailored to us. It could be like an extension of our mind that’s always available: helping us remember things, organize our ideas, challenge our assumptions, and learn new concepts, all in a very natural dialogue. Think Jarvis from Iron Man, but focused less on executing commands and more on thinking with you.

On the research and development front, the concept of cognitive agents or multi-step AI reasoning is gaining steam. We’re likely to see more systems that chain multiple AI models or calls, where one model’s output feeds another’s input in complex loops. The scaffolding we do manually today might become an automated feature: models might internally carry a chain of thought that they don’t always show, but that you can query or ask them to explain (some early versions of this exist; researchers sometimes retrieve the model’s hidden “thoughts” to see why it answered a certain way). OpenAI’s function calling and tools are one step in this direction, giving models the ability to not just respond, but to act, plan, and use other tools in a structured manner. It’s not hard to imagine more sophisticated toolkits that let developers script an AI’s thought process at a higher level, mixing logic and neural intuition. In a way, we might be heading toward models that come with built-in scaffolding: guardrails and thought patterns that make them more reliable out of the box.

For us end-users and creators, the challenge and opportunity will be personalization and control. A scaffold is most useful when it fits the task and the person. The way I break down a problem might differ from how you would. Ideally, you should be able to tweak your AI’s “thinking style” to match your own workflow – a kind of custom cognitive profile. We’re already doing this informally via prompt engineering, but I can imagine user-friendly interfaces where you essentially say, “Hey, AI, when I’m solving coding problems, first help me outline the approach, then let’s write pseudocode, then catch any mistakes. But when I’m doing creative writing, let’s use a different scaffold: set the scene, develop characters, etc.” Your AI could switch modes, a scaffold for every occasion.
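A mode switch like that could start out as nothing fancier than a lookup table of scaffolds. The mode names and stages below are hypothetical, taken from the two examples in the paragraph above; the function turns a mode’s stages into a numbered instruction you might send as a system message.

```python
from typing import Dict, List

# Hypothetical per-task scaffolds: the stages to walk through, in order.
SCAFFOLDS: Dict[str, List[str]] = {
    "coding": ["outline the approach", "write pseudocode", "catch any mistakes"],
    "creative_writing": ["set the scene", "develop characters"],
}


def scaffold_instructions(mode: str) -> str:
    """Turn a mode's stages into a numbered, system-style instruction."""
    stages = SCAFFOLDS.get(mode)
    if stages is None:
        raise KeyError(f"unknown mode {mode!r}; choose from {sorted(SCAFFOLDS)}")
    numbered = "\n".join(f"{i}. {stage.capitalize()}." for i, stage in enumerate(stages, 1))
    return f"Work through this task in stages, one at a time:\n{numbered}"
```

Adding a new “thinking style” is then just a new dictionary entry, which keeps the personalization in plain data you can edit rather than buried in prompt text.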

The concept of GPT as a cognitive scaffold flips the narrative of AI as a replacement for human thinking. Instead, AI becomes a thinking aid, a partner. We don’t ask “What will the AI come up with?” in isolation; we ask “What can we come up with together, with the AI’s help?” In my experience, this mindset is both liberating and empowering. It reduces the intimidation factor of big problems (because you have a structured approach at hand) and it keeps you, the human, in the loop – making the judgments, providing the creativity, and setting the goals.

As we continue building tools that think with us, not just for us, we tap into the best of both worlds: human judgment and creativity, combined with machine speed and breadth of knowledge. It feels like we’re architecting a new form of cognition – one that’s distributed between person and AI. And if we design it right, with good scaffolds and shared control, it might just help us tackle problems that neither could solve alone.