Advanced Techniques for Transformer Interpretability

In recent years, researchers have developed numerous methods to peer inside transformer models and understand how they work. Building on the concept of layer-wise sub-model interpretability – treating each layer or component as an interpretable sub-model – this report delves into advanced techniques that enhance model transparency. We examine theoretical foundations, such as saliency maps, attention analysis, causal interventions, neuron-level studies, mechanistic interpretability (circuits), and probing. We then explore practical applications: fine-tuning models for interpretability, ensuring AI safety through transparency, modular experimentation, and aligning models with human values. Each section is grounded in current research findings, with references to key papers.

Theoretical Aspects

Saliency Maps and Feature Attribution

Saliency and feature attribution methods explain a model’s prediction by assigning importance scores to input features (e.g. words in a sentence). Integrated Gradients (IG), SHAP, and LIME are prominent examples:

- Integrated Gradients (IG) computes the integral of gradients along a path from a baseline input to the actual input, producing an attribution score for each feature (The Explainability of Transformers: Current Status and Directions) (The Explainability of Transformers: Current Status and Directions). It satisfies certain axioms of fairness in attribution (The Explainability of Transformers: Current Status and Directions) and was introduced by Sundararajan et al., 2017 (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers).

- SHAP (SHapley Additive exPlanations) assigns importance by connecting feature contributions to Shapley values from game theory. It unifies ideas from earlier methods (like LIME) and ensures additive feature importances that sum to the model’s output difference (The Explainability of Transformers: Current Status and Directions).

- LIME (Local Interpretable Model-agnostic Explanations) fits a simple surrogate (like a linear model) in the neighborhood of the input by perturbing inputs and observing changes in the output (The Explainability of Transformers: Current Status and Directions). The surrogate’s weights then serve as feature attributions.

These methods have been applied to transformer-based NLP models to highlight which words most influence a prediction (for example, highlighting important tokens in a text classification) (The Explainability of Transformers: Current Status and Directions) (The Explainability of Transformers: Current Status and Directions). However, a major challenge is faithfulness – whether the highlighted features truly caused the model’s output. Studies have shown that different attribution techniques often disagree on what the “important” tokens are, casting doubt on their reliability (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers) (The Explainability of Transformers: Current Status and Directions). For instance, Jacovi and Goldberg (2020) and Neely et al. (2022) found that attributions from methods like IG vs. LIME can diverge significantly (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers). Such inconsistencies mean saliency maps should be interpreted with caution. Recent research emphasizes benchmarking attribution methods for transformers and checking their concordance (agreement) and faithfulness to the model’s actual decision process (The Explainability of Transformers: Current Status and Directions). In summary, saliency maps provide an intuitive visualization of feature importances and remain a useful tool, but they should ideally be complemented with additional analyses to ensure we are explaining the model rather than artifacts of the explanation method (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers).

Attention Visualization

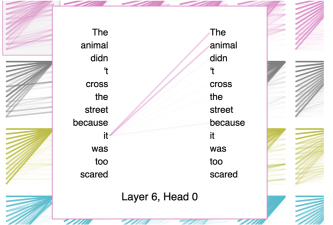

One of the transformer’s core innovations is the self-attention mechanism, which produces attention weights indicating how strongly each token attends to others. Visualizing these raw attention maps (e.g. as heatmaps or directed graphs between words) can sometimes elucidate patterns – for example, showing that pronouns often attend to their antecedent nouns. Attention visualization tools like BertViz have revealed that certain heads focus on specific relationships (e.g. attending from “it” to “the animal” in a sentence) (Explainable AI: Visualizing Attention in Transformers). Yet, there has been debate on whether attention weights are meaningful explanations of model decisions.

(Explainable AI: Visualizing Attention in Transformers) Visualization of an attention head linking the pronoun “it” to “the animal” in a transformer (GPT-2). By examining attention patterns, we see the model strongly connects “it” with “the animal” (pink lines), indicating how the model resolves the pronoun reference (Explainable AI: Visualizing Attention in Transformers).

Researchers Jain and Wallace (2019) showed that you can often alter attention weights without changing a model’s output, implying that “attention is not explanation.” In other words, an attention map might highlight certain words, but those weights alone don’t reliably tell us why the model made a prediction (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL). Serrano and Smith (2019) found that even when they zeroed-out (ablated) high attention connections, the model’s output sometimes remained unchanged – suggesting attention distributions can be misleading as causal explanations.

To address these limitations, more robust interpretability techniques have been proposed on top of raw attention:

- Attention Rollout and Attention Flow: Abnar and Zuidema (2020) introduced methods to aggregate attention across multiple layers ([2005.00928] Quantifying Attention Flow in Transformers). Rather than looking at a single layer’s weights, attention rollout computes an overall influence of one token on another by multiplying through the attention graphs layer by layer. This helps track how information flows through the network. They showed that such aggregated attention correlates better with true importance (as measured by input ablation tests and gradients) than raw attention from a single layer ([2005.00928] Quantifying Attention Flow in Transformers). Essentially, attention rollout provides a higher-level visualization of which input tokens ultimately have the most effect on the output, accounting for the multi-layer transformations.

- Attention-weighted Gradients: Other works combine attention with gradients, e.g. weighting each token’s gradient by its attention score, to filter out attention heads that aren’t influential. These hybrid methods attempt to identify which attended features most affect the loss or output.

- Integrated Attention and LRP: Extensions of layer-wise relevance propagation (LRP) to transformers propagate relevance not just through neurons but also through attention structures (The Explainability of Transformers: Current Status and Directions). This can attribute output importance back through the attention layers, providing a view of which key-query interactions were most responsible.

Overall, attention visualizations are a powerful tool to inspect transformer internals and have yielded insights (like discovering heads that track coreference or syntax (Explainable AI: Visualizing Attention in Transformers)). But raw attention alone has clear limitations in interpretability. The consensus emerging from recent research is that attention patterns need to be interpreted in context (e.g. combined with gradients or analyzed across layers) to understand why the model attends to those features ([2005.00928] Quantifying Attention Flow in Transformers). Enhanced attention visualization techniques aim to bridge that gap, making attention more faithful to the model’s true reasoning by tracing how attention contributes to outcomes rather than treating it as an explanation by itself.

Causal Interventions

To truly understand causality inside a model – i.e. what components or neurons cause certain outputs – we turn to intervention-based analysis. Causal intervention techniques actively perturb or control parts of the model and observe the effects, allowing us to make stronger claims than passive observation. Key approaches include ablation, activation patching, and counterfactual analysis:

- Feature Ablation: This involves zeroing out or removing certain components (such as an attention head or a neuron activation) and seeing how the model’s output changes. If ablating a component significantly alters the prediction, it suggests that component was important (causally implicated) for that output. For example, in BERT one might ablate a specific attention head to see if the model can still resolve a pronoun correctly; a large drop in performance would indicate that head was crucial for pronoun resolution.

- Activation Patching (Swap Tests): Also known as causal trace or interchange interventions, this method replaces intermediate activations in a model for one input with those from another input to see if a specific behavior transfers (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL) (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL). For instance, suppose we have two sentences: one where the model makes a correct prediction and one where it makes a mistake. By “patching” (inserting) the activations from the correct example into the model processing the faulty example (at a specific layer), we can test if that layer contains information that would fix the mistake (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL). If after patching layer L the model’s output becomes correct, that suggests layer L (or some subset of its neurons) encodes the necessary information for the correct prediction. Researchers have used this to pinpoint which attention heads or MLP outputs carry certain facts or grammatical cues (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL) (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL). Notably, recent work identified attention heads in GPT-2 that are “counterfactually important”: swapping those heads’ activations between sentences changes whether a particular token is predicted (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL).

- Counterfactual Inputs and Activation Editing: Here, one generates or uses specially crafted inputs that differ in a targeted way, or directly edits internal representations, to probe causality. For example, to test if a neuron detects a concept “X”, we could find inputs that activate the neuron strongly (concept present) and others that are identical except without concept “X”, then see if the neuron’s activation (and model output) changes accordingly.

These intervention methods have been formalized in frameworks like Causal Mediation Analysis (CMA). Vig et al. (2020) applied CMA to BERT to quantify how much of a model’s bias or behavior is mediated by specific components ([2004.12265] Causal Mediation Analysis for Interpreting Neural NLP: The Case of Gender Bias). In their study of gender bias, they treated certain neurons and attention heads as mediators and measured how “masking” those mediators reduces bias in the output. They found bias was sparse (concentrated in a few components) and that some heads amplified or repressed bias effects (a sign of interactions among components) ([2004.12265] Causal Mediation Analysis for Interpreting Neural NLP: The Case of Gender Bias). This kind of analysis goes beyond “X is important” to dissect how information flows: e.g. determining that a particular neuron carries gender information that later influences a prediction ([2004.12265] Causal Mediation Analysis for Interpreting Neural NLP: The Case of Gender Bias).

Another example is the work by Geiger et al. (2021) on causal abstraction, which seeks to map the model’s internal computation to a human-understandable causal model. By intervening on groups of neurons and seeing if the model’s behavior can be described by a higher-level causal graph, they aim to simplify the network’s logic in interpretable terms.

Importantly, causal interventions aren’t limited to diagnosing models; they also guide editing them. If we identify a neuron that causally triggers an undesirable output, we might alter or regulate that neuron to prevent the behavior (an idea we revisit in AI Safety and Alignment). Overall, by actively probing “what if we change this part?”, researchers can move from correlation to causation – identifying not just which components correlate with a behavior, but which ones determine it (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL) ([2004.12265] Causal Mediation Analysis for Interpreting Neural NLP: The Case of Gender Bias). This offers a powerful path to truly understanding and controlling transformers.

Neuron-Level Interpretability

Transformers consist of thousands (or billions) of neurons across their layers. Neuron-level interpretability asks: can we understand the role of individual neurons (or small groups of neurons)? In early neural network research, especially on vision models, efforts like Network Dissection labeled neurons as detecting concepts like “dog” or “circle.” In transformers (for language), neurons can be more abstract, but researchers have found striking examples:

- Some neurons appear to track specific concepts or features. For instance, in GPT-2, researchers at OpenAI noticed a neuron that acted as a “sentiment neuron,” whose activation level correlated strongly with the positivity or negativity of the text. Similarly, there are neurons that seem to count parentheses or track whether a sentence is inside a quotation.

- Knowledge Neurons: A recent line of work investigates how factual knowledge (e.g. “Paris is the capital of France”) is stored in language models. Surprisingly, it was found that such facts can often be traced to a small number of neurons in the model’s feed-forward layers (Knowledge Neurons in Pretrained Transformers) (Knowledge Neurons in Pretrained Transformers). For example, Dai et al. (2022) introduced the concept of knowledge neurons (Knowledge Neurons in Pretrained Transformers). Using a technique called knowledge attribution, they measure each neuron’s influence on predicting a specific factual statement. They discovered that for a given fact (like a country’s capital), there are individual neurons in mid-layer MLPs whose activation is positively correlated with that fact being true (Knowledge Neurons in Pretrained Transformers) (Knowledge Neurons in Pretrained Transformers). By amplifying or suppressing those neurons, they could increase or decrease the model’s tendency to output the fact, effectively toggling the knowledge (Knowledge Neurons in Pretrained Transformers). This implies those neurons encode that piece of information in human-interpretable form.

- Neuron Naming and Clustering: Ongoing research attempts to label neurons by the type of feature they detect. One approach is to feed the model many inputs and see what triggers a given neuron, then use natural language to summarize a common theme. For instance, a neuron might fire for tokens related to numbers, or for polite speech. Work by GPT-4’s developers and others have used techniques like automated interpretation (searching for a string that maximally activates a neuron) to guess a neuron’s function.

Neuron-level analysis also benefits from causal methods. Instead of just correlating neuron activations with phenomena, researchers perform neuron ablation: set a neuron’s value to zero and see if a particular capability is lost. If zeroing out one neuron consistently causes the model to forget a fact or make a specific error, that’s strong evidence the neuron represented that information (Knowledge Neurons in Pretrained Transformers). For example, Meng et al. (2022) identified neurons in GPT models that, when ablated, specifically drop the model’s knowledge of certain names or events while leaving other outputs intact ([2202.05262] Locating and Editing Factual Associations in GPT).

A challenge in transformer interpretability is superposition: models often compress multiple concepts into one neuron due to limited dimensions, meaning a single neuron can fire for multiple unrelated concepts. This complicates the one-neuron-one-feature narrative. Research by Elhage et al. (2022) on superposition shows that many features are not perfectly separated in different neurons, but rather entangled. Despite this, there are still many cases of dominant neurons where one feature is clearly influential.

In summary, neuron-level interpretability in transformers is revealing that even in huge networks, individual units can carry surprisingly clean signals for specific functions or facts (Knowledge Neurons in Pretrained Transformers) (Knowledge Neurons in Pretrained Transformers). By identifying and manipulating such neurons, we gain fine-grained control and understanding – for instance, tweaking a single neuron to adjust a model’s sentiment or correct a specific false fact. This granularity complements broader interpretability methods and opens up avenues for targeted model editing and debugging at the neuron level.

Mechanistic Interpretability and Circuits

Moving beyond individual neurons, mechanistic interpretability aims to decipher the algorithmic structure of a model’s computation. In other words, can we reverse-engineer a transformer’s neural network into human-understandable “circuits” performing discrete sub-tasks? This field was pioneered by work like Olah et al.’s Circuits research in vision models and is now being applied to transformers.

A circuit in this context is a subnetwork (a subset of neurons and connections) that realizes some function or sub-task within the model (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL). Rather than treating the model as a black box, researchers try to break it into meaningful parts – for example, a circuit that performs grammar checking, or one that tracks entities in a story. Recent studies have made impressive progress:

- Reverse-Engineering GPT-2 for Indirect Object Identification (IOI): Wang et al. (2022) analyzed a GPT-2 model on the task of resolving ambiguous pronouns (indirect objects). They discovered a circuit of 28 attention heads (spread across several layers) that together implement the behavior “given a sentence like When Mary and John went to the store, John gave a drink to _, the model outputs ‘Mary’” (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL). This IOI circuit was categorized into roles: some heads identify all the names in the sentence, others suppress the second occurrence of a name, and “Name Mover” heads finally copy the correct name into the blank (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL) (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL). In effect, the researchers found that a complex linguistic task was handled by a coordinated group of attention heads acting in sequence – a computational graph that mirrors an algorithm a human might write for the same task. Crucially, they validated this by interventions: by patching or ablating those heads, they showed the circuit was necessary and (largely) sufficient for the behavior (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL) (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL).

- Induction Heads and In-Context Learning: Another notable finding (by Olsson et al., 2022) was the identification of induction heads – pairs of attention heads in GPT-style models that enable the model to continue a pattern seen earlier in the context. Essentially, one head looks for a token that has appeared before, and another head attends to the token that followed that first occurrence, thereby “inducing” repetition of a sequence. This small two-head circuit allows the model to do a kind of simple in-context learning (copying a sequence pattern) (In-context Learning and Induction Heads – Transformer Circuits Thread) (Induction heads in the GPT2-small model. (a) Several heads in…). Such a circuit is like a known algorithm (search and copy) implemented in the attention mechanism.

- Mathematical Frameworks: Researchers at Anthropic (Elhage et al., 2021) proposed a mathematical framework for breaking down transformer computations layer by layer. They view each layer as performing a set of linear transformations plus nonlinearities that either add or remove information in the residual stream (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers) (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers). In one experiment, they trained small transformers on simple algorithmic tasks and then successfully recovered the exact algorithm (e.g., binary addition) from the trained weights – a proof of concept that neural networks can sometimes be decoded into human-readable programs.

Mechanistic interpretability often involves iterative hypothesis testing: propose that a certain group of neurons/heads implements X, test it by interventions or visualization, refine the grouping, etc. Tools like Circuit Graphs and Automated Circuit Discovery are being developed to assist this process, by searching for minimal sub-networks that can replicate a model’s behavior on a task. For example, one method prunes away connections and heads not needed for a specific output, aiming to isolate a sparse circuit that still produces that output (Transformer Circuit Faithfulness Metrics Are Not Robust – arXiv) (Finding Transformer Circuits with Edge Pruning – arXiv).

A crucial aspect of this research is ensuring the explanations are rigorous. Wang et al. (IOI circuit paper) introduced criteria for circuit explanations: (1) Faithfulness – the circuit should produce the same output as the original model (for the task in question); (2) Completeness – it should include all components that significantly contribute; (3) Minimality – it should exclude components that aren’t necessary (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL). By enforcing these, they avoid pat explanations that sound plausible but omit important pieces.

Mechanistic interpretability is still a developing field, but its promise is immense: if we can fully reverse-engineer parts of a transformer, we essentially understand the model like a piece of code. This not only satisfies scientific curiosity, but also allows us to trust models more (we know what computations they perform) and to modify them in precise ways. Already, insights from circuit analysis have informed us which components handle which sub-tasks, enabling more targeted debugging and improvements. In combination with the other techniques (attribution, attention, etc.), circuits bring us closer to treating a neural network not as an inscrutable tangle of weights, but as a set of understandable algorithms learned from data (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL) (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL).

Probing and Layer Analysis

Probing is an approach to investigate what information is present in a model’s representations, typically at each layer. The idea is to train lightweight classifiers (probes) on the model’s internal activations to predict some property, without fine-tuning the model itself. If the probe succeeds, it suggests the model’s embeddings encode that property at that layer.

In the context of transformers, probing has revealed a layer-wise progression of linguistic knowledge:

- Basic features in early layers: The lowest layers of BERT and other models tend to encode very local information such as part-of-speech tags or whether a token is at the beginning of a sentence. For instance, a probe can easily read off POS tags from layer 1 or 2 of BERT (BERT Rediscovers the Classical NLP Pipeline).

- Syntactic relations in middle layers: Intermediate layers capture syntax and grammar. Probes have shown that constituents (phrases) and dependency relations are encoded in these layers (BERT Rediscovers the Classical NLP Pipeline) (BERT Rediscovers the Classical NLP Pipeline). For example, Tenney et al. (2019) found that around the middle layers, a probe could identify which words depend on which (subject-verb relations, etc.), indicating the model builds up a parse-like structure as you go up the stack.

- High-level semantics in upper layers: The top layers focus more on semantic features and task-specific information. By the final layers, the model’s representation is very task-tuned (e.g. for classification, the top-layer embedding is linearly separable by the class label). Probing studies observed that abstract relations (like coreference or semantic roles) emerge in higher layers (BERT Rediscovers the Classical NLP Pipeline). Tenney’s work famously described this as “BERT rediscovering the classical NLP pipeline” – first morphology and syntax, then semantics (BERT Rediscovers the Classical NLP Pipeline).

For example, one probing study showed that if you take BERT and try to predict the part-of-speech of each word from each layer’s activations, accuracy is highest at a low layer (around layer 2-3). For predicting, say, if two words are in a dependency relationship, performance peaks a bit higher (layer 4-5). And for something like coreference resolution (“does it refer to the animal?”), success comes in even higher layers (BERT Rediscovers the Classical NLP Pipeline). This layered emergence aligns with intuitive stages of language processing (BERT Rediscovers the Classical NLP Pipeline) (BERT Rediscovers the Classical NLP Pipeline).

Another approach to layer analysis is the Logit Lens (AKA “model lens”). This technique projects the intermediate representations directly into the model’s output vocabulary space by applying the output layer (the decoder matrix) at each layer (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers). Essentially, you ask: if the model had to make a prediction after layer L, what would it predict? This gives a series of “partial predictions” across layers. Researchers found that these partial outputs can be interpretable: for example, in a translation model, an early layer might already produce a rough translation of the first few words, while later layers refine it (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers) (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers). Tuned lenses are a refinement where they allow a small learned affine transformation before projecting to words, making the intermediate predictions more accurate (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers) (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers). Using such lenses, Merullo et al. (2023) identified distinct stages in GPT’s processing of a question: early layers predict broad topics, middle layers start forming the actual answer, and final layers handle detail words (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers) (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers). This aligns with the probe findings that information is added layer by layer.

Probing has also been used for non-linguistic properties: e.g., to see if a model’s layers encode world knowledge. Some studies trained probes on frozen language model embeddings to answer factual questions (yes/no) and found that knowledge is spread across many layers, but certain layers (often the feed-forward mid-layers) are especially crucial (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers) (this connects with the “knowledge neurons” idea that mid-layer MLPs store facts).

One important consideration is probe selectivity: a probe might successfully extract information, but was it truly encoded by the model for its own use, or is the probe finding a weak signal that the model never actually uses? This is debated in literature. Techniques like control tasks and minimum description length (MDL) probes have been proposed to ensure we measure meaningful encodings. Generally, if a simple linear classifier can quickly learn to predict a property from layer L, it’s a sign the representation at L linearly separates that property – likely the model’s training caused that separation because it was useful (BERT Rediscovers the Classical NLP Pipeline).

In summary, probing and layer-wise analysis present a compelling narrative: as you ascend a transformer’s layers, you can observe a transition from simple to complex features, mirroring human-designed pipelines (BERT Rediscovers the Classical NLP Pipeline) (BERT Rediscovers the Classical NLP Pipeline). This not only demystifies what each layer is doing, but also guides where to intervene or inspect for certain information. For instance, if we want to know whether a model has learned syntax, we know to examine the middle layers with syntactic probes. If we want to inject or edit factual knowledge, probing tells us the mid-to-upper layers’ feed-forward blocks are the right targets (as they hold semantics and facts). Thus, probing complements direct interpretability by mapping what is stored where inside the network.

Practical Applications

Fine-Tuning for Interpretability

While much of interpretability research focuses on analyzing fixed models, an emerging idea is to train or fine-tune models in ways that make them inherently more interpretable. The goal is to maintain performance while structuring the model or its training such that its workings are more transparent. Several strategies have been explored:

- Constraining Explanations (“Right for the Right Reasons”): One approach is to add an auxiliary loss that encourages the model’s own attributions or gradients to align with human expectations. For example, Ross et al. (2017) penalized a model if it relied on features it shouldn’t (as determined by an expert) ([1703.03717] Right for the Right Reasons: Training Differentiable Models by Constraining their Explanations) ([1703.03717] Right for the Right Reasons: Training Differentiable Models by Constraining their Explanations). In image classification, if a model predicting “bird” focuses on the sky instead of the bird, this loss would discourage that, forcing the model to adjust so that its gradient-based explanation points to the bird. Applying this to transformers, one could imagine penalizing a text model for attending to irrelevant tokens or encourage that certain known important tokens always have high saliency. By doing so, the model is trained to be explainable by design, yielding more faithful explanations. Ross et al. showed that models trained with such constraints not only offered more interpretable rationale (by aligning model explanations with human-provided ones) but also generalized better out-of-distribution ([1703.03717] Right for the Right Reasons: Training Differentiable Models by Constraining their Explanations), presumably because they learned the “right” features.

- Self-Explaining Models: Another line of research is developing architectures that produce human-readable explanations along with predictions. For instance, a transformer could be fine-tuned to output a rationale sentence before its final answer (this is related to chain-of-thought prompting, where the model generates its reasoning). If the rationale is enforced to be faithful, the model effectively explains itself. There have been NLP models (like those for multi-hop QA or commonsense reasoning) trained to generate an explanation paragraph that supports the answer. These can be seen as enhancing interpretability – though ensuring the explanation is an accurate reflection of the internal reasoning is a challenge (models can generate plausible-sounding explanations that are not actually why they arrived at an answer).

- Bottleneck Architectures and Probes during Training: One can modify the transformer architecture slightly to include “interpretable bottlenecks.” For example, inserting a layer that has to predict certain intermediate concepts (like facts or grammar tags) before proceeding – akin to forcing the model to explicitly compute those and making it easier to inspect. In vision, there are concept bottleneck models that first predict attributes then use them to predict the label; similarly, one could have a language model first produce some intermediate representation that’s constrained (like a set of labels or simplified text) then continue. Researchers have tried multi-task learning where one task is the original and another is an explanatory task, thus fine-tuning the transformer to not only perform well but also to make its internals align with the explanatory task.

- Sparse and Modular Fine-Tuning: We can also fine-tune models to be more modular – e.g. encourage sparsity in attention or activation so that only a few heads/neurons activate for a given function. By L1 regularization or entropy penalties on attention distributions, the resulting model might use more distinct, disentangled parts, which are easier to interpret. Some recent transformer modifications aim to create transparent attention (forcing each head to attend to only one thing in a nearly binary way) or modular MLP layers (each neuron or subset of neurons handles a separate sub-task). These constraints during fine-tuning can yield a model that is slightly less opaque.

One concrete example in the literature: researchers fine-tuned BERT on a sentiment classification task while providing it with human highlights of important words, essentially training it to have attention that mirrors those highlights. The resulting model not only was accurate but its attention weights could be directly used as explanations (since they were taught to align with human rationale).

The trade-off is often between performance and interpretability – adding such constraints can hurt accuracy if not done carefully. However, the payoff is a model that is much easier to troubleshoot. If it makes a wrong prediction, one can examine the intermediate explanations or activations to see where it went astray. Fine-tuning for interpretability is a young area, but it represents a proactive stance: rather than post-hoc trying to explain a complex model, why not train the model to be more explainable in the first place? This might involve minor sacrifices in the model’s flexibility but yields a model that stakeholders can trust and audit more readily.

AI Safety and Alignment

Interpretability isn’t just an academic exercise – it’s increasingly seen as a crucial tool for AI safety and alignment. As AI systems like large language models become more powerful, understanding their “thought process” helps ensure they behave in line with human values and do not produce harmful outputs. Here’s how interpretability intersects with safety:

- Detecting Misaligned Goals or Deceptive Behavior: One fear is that an advanced AI might “think” something internally (for example, consider a harmful action) but not surface it explicitly. If we have interpretability tools to read the model’s intermediate states, we could potentially catch these misaligned intentions. A 2023 study by Burns et al. introduced Contrast-Consistent Search to find latent knowledge in language models (Discovering Latent Knowledge in Language Models Without Supervision | OpenReview) (Discovering Latent Knowledge in Language Models Without Supervision | OpenReview). They managed to identify a direction in the model’s activation space that corresponds to the model’s true belief about a factual question – even if the model’s output was coerced into saying something else. In other words, they could often tell what the model really “knows” versus what it says (Discovering Latent Knowledge in Language Models Without Supervision | OpenReview) (Discovering Latent Knowledge in Language Models Without Supervision | OpenReview). This is directly relevant to alignment: if a model says “I don’t know how to make a bioweapon”, an interpretability method might reveal that internally it has the knowledge and is just avoiding saying it. With that information, humans can make informed decisions (e.g. do not deploy that model or add safeguards).

- Preventing Harmful Outputs: By monitoring activations in real-time, we might stop a model from generating disallowed content. For example, if certain neurons are known to fire when the model is about to produce hate speech or confidential information, those could trigger a safety off-switch. OpenAI and others have experimented with such “neural tripwires.” As a hypothetical, imagine a neuron that activates strongly for the concept of self-harm instructions. A safety system could watch the model’s forward pass, and if that neuron’s activation crosses a threshold, intervene (either by truncating the output or redirecting to a safe completion).

- Understanding Failure Modes: Interpretability helps diagnose why a model fails or behaves poorly, which is essential for alignment. If a model gives a biased or toxic response, tracing the attention or neuron activations might show it latched onto an inappropriate association learned from training data. Perhaps a certain circuit is biased – once identified, that circuit can be modified or retrained. This was demonstrated in work on gender bias, where identifying specific attention heads that carried gendered information allowed researchers to mitigate biased language generation by controlling those components ([2004.12265] Causal Mediation Analysis for Interpreting Neural NLP: The Case of Gender Bias).

- Aligned Fine-Tuning Targets: When doing fine-tuning for alignment (like RLHF – Reinforcement Learning from Human Feedback, used for models like ChatGPT), interpretability can highlight which model internals correlate with misbehavior. If during training the model receives a bad reward for a certain output, developers can see what internal activations led to it and explicitly adjust them. There is research into combining interpretability with RLHF so that the reward model doesn’t just look at the final output but also at whether the model considered a disallowed action internally.

- Verifying “Honesty” and Truthfulness: Aligning AI with truth and honesty is a challenge – models may give false answers either because they lack knowledge or because of prompt incentives. Interpretability methods have been used to check if a model knew the correct answer. In the Discovering Latent Knowledge paper (Discovering Latent Knowledge in Language Models Without Supervision | OpenReview), the authors could ask a yes/no question and, by reading internal states, determine the model’s likely true belief (yes or no) even if the model’s output was different due to a prompt. This kind of capability is a step toward building AI that are honest about their uncertainty or knowledge, because an aligned system could consult the model’s latent knowledge and override a bluffing or hallucinating answer.

The long-term vision in the alignment community is interpretability-enabled oversight: we might have AI assistants auditing other AI systems by inspecting their internals. For instance, one could train an “interpreter model” that takes in the hidden states of a large model and outputs a verdict like “Model is about to produce disallowed content” or “Model is following user instructions versus ignoring them.” There’s already work in creating simplified metamodels that approximate a complex model’s thinking in a human-readable form – a direction sometimes called “transparent chain-of-thought.”

In sum, interpretability provides the safety net and measurement tools for alignment. It turns the opaque mind of the model into a somewhat observable process, so we’re not flying blind when ensuring AI doesn’t go off the rails. Every unsafe outcome has precursors in the activations; interpretability is how we shine a light there. As we get better at it, we improve our ability to trust but verify AI systems. An aligned AI future likely hinges on making these black boxes transparent enough that we can confidently direct them towards beneficial behavior and away from harmful outcomes.

Modular Experimentation with Layers

Transformers are deep stacks of layers, and researchers are increasingly treating them as modular systems where parts can be swapped, re-used, or studied in isolation. The idea of layer-wise sub-model interpretability plays in here: each layer (or block) of a transformer can be seen as performing a sub-computation, so what if we experiment with those sub-computations directly? This has led to creative techniques:

- Layer Swapping and Remixing: Given two models or two different training snapshots of a model, one can try exchanging layers between them to see how it affects performance. Surprisingly, some transformer layers are somewhat interchangeable. For example, one study took two copies of GPT-2 trained on different data and swapped some middle layers – the resulting hybrid still functioned, implying those layers learned a modular function like “generic English syntax processing” that didn’t depend on the specifics of training data. This modularity suggests layers learn broadly applicable transformations (like normalization of syntax or aggregation of information) which can be transplanted.

- Early Exiting and Layer Removal: Researchers have tried truncating models – e.g. removing the last few layers – to see what the earlier layers by themselves can and cannot do. Early exit techniques allow a model to produce outputs from intermediate layers if a certain confidence is reached (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers). Analyses showed that sometimes a model can “make up its mind” by the second-to-last layer, and the final layer is just refining or increasing confidence (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers). This kind of experimentation informs us about which layers are truly necessary for which capabilities.

- Intervening at Specific Layers: We discussed activation patching in the theoretical section; modular experimentation is doing that in a more exploratory way. For instance, one can feed input A to the model up to layer N, and input B from layer N+1 onward (effectively splicing the computation at layer N). By doing this systematically, we learn how layer N’s output influences final results. This method was used to pinpoint where certain factual information is integrated in the model. If you take a prompt with a known fact and another prompt with a corrupted fact, and you swap their states at a certain layer, observing whether the correct or corrupted fact wins out tells you that layer’s role in factual recall.

- Knowledge Editing: A very direct modular intervention is editing model parameters in a specific layer to implant or alter knowledge. Recent techniques like ROME (Rank-One Model Editing) target the weights of a single mid-layer feed-forward network to insert a new fact (e.g. change the model so that “The capital of Italy is Venice” instead of Rome) ([2202.05262] Locating and Editing Factual Associations in GPT) ([2202.05262] Locating and Editing Factual Associations in GPT). ROME finds a rank-1 update to the weights at a particular layer that accomplishes this change. The success of ROME highlights that one layer often contains a localized representation of certain knowledge that can be modified without retraining the whole model ([2202.05262] Locating and Editing Factual Associations in GPT) ([2202.05262] Locating and Editing Factual Associations in GPT). Similarly, other methods (MEMIT, etc.) edit multiple layers in a small region. The ability to surgically modify layers and see a specific change in model behavior is strong evidence of modularity – it’s like changing one function in a large program.

- Rewinding and Replaying Layers: Another experiment is to take the outputs of layer N for some input and feed them into another model’s layer N+1 to see if it can continue processing meaningfully. If model B can pick up where model A left off (at some layer interface), it implies a kind of standardized protocol of information at that interface. Some research found that within the same model family, later layers can indeed process earlier layers’ representations even across different random initializations or training variations, suggesting a degree of learned communication protocol between layers.

All these experiments treat layers as Lego blocks that can be analyzed and manipulated. The benefit is twofold: (1) Understanding – by seeing what happens when we remove or alter a layer, we learn what it was doing. For example, if removing layer 5 causes the model to lose subject-verb agreement, we deduce layer 5 was ensuring grammatical agreement. (2) Controllability – if layers are modular, we can envision mixing and matching to create models with new properties without training from scratch, or isolating problematic behavior to a subset of layers and fixing it. It’s a very engineering approach to neural networks.

One concrete outcome of modular analysis: after identifying a problematic behavior in certain layers, one could retrain just those layers (freezing others) on a focused dataset to correct the issue. This is faster than full fine-tuning and minimizes side-effects. Another outcome is the possibility of ensemble of layers: for uncertain tasks, one could run two different versions of layer 10 (say, one more creative, one more factual) and have layer 11 choose between them.

In summary, treating transformer layers as modules has opened up new ways to experiment. It demystifies the stack of layers – rather than an indistinguishable mass of weights, we see a pipeline of transformations where we can poke at stage 1, tweak stage 2, etc. This modular view complements interpretability: after identifying what layers do via probes and circuits, we act on them via swaps, edits, or removals to test hypotheses and even improve the model.

Adjusting and Aligning Models

Finally, we consider how interpretability and transparency contribute to the adjustment and alignment of AI models to human needs. A transparent model is much easier to align – you can tell when it’s deviating and why. Several themes arise here:

- Trust and Verification: Transparent models allow users and developers to trust AI systems. When a model can explain its reasoning or when we can audit its internals, it’s easier to deploy in high-stakes areas (medical, legal, etc.). For example, a diagnostic transformer model might highlight the symptoms in a medical record that led to its conclusion. Doctors can verify those are valid reasons – if not, they know the model is misaligned with medical knowledge. This builds trust because the decision process is open for evaluation, not hidden.

- Alignment with Human Values: Often, misalignment happens because the model optimizes the wrong objective (e.g. maximizes user engagement at the cost of truth). Through interpretability, we can identify the internal signals that correspond to unethical or undesired outputs and then reduce the model’s reliance on them. For instance, if a summarization model is found to heavily use a “sensationalism neuron” to produce clickbait style summaries, we can adjust the training objective or explicitly regularize that neuron’s activity. Transparency makes value alignment feasible by pinpointing what to change within the model.

- Feedback and Iterative Improvement: In an aligned AI development process, one might train a model, interpret its behavior, and then adjust it in a loop. Each round, interpretability tools reveal a flaw or bias, and engineers address it by fine-tuning or architectural tweaks, then repeat. This is analogous to debugging software – you observe where the program’s logic fails and then go fix that part. Without interpretability, this process is guesswork; with interpretability, it becomes targeted and systematic.

- Controlling Model Behavior: A transparent model can be controlled more easily because we understand the knobs and dials inside it. For example, if we know which component in a dialogue agent produces humorous responses, we could amplify it for a playful chatbot or dampen it for a formal assistant. Alignment isn’t only about avoiding bad outcomes but also shaping the AI’s personality and style to match human preferences. At OpenAI and other labs, there’s research into steering models via interpreter models that sit on top of the original model’s activations and can modify them on the fly (a kind of real-time alignment).

- Safe Failures: Aligned models should fail gracefully or defer to humans when uncertain. Interpretability can provide uncertainty estimates – if the model’s internal representations are wildly inconsistent or if it’s oscillating between two decisions, that could signal low confidence. By monitoring such patterns, the system can decide to say “I’m not sure about that, let’s get a human’s input.” This aligns with the value of humility in AI systems, preventing confident but wrong answers.

One illustrative example is the use of interpretability in content filtering. Instead of just training a model to refuse certain requests (which can be brittle), researchers can find neurons that activate for sensitive content (like violence or hate) and then programmatically use those signals as an additional gate. This dual approach – both the model’s output and its internal state must be safe – is more reliable. If the model tries to cleverly phrase a disallowed content in a new way, the internal neuron might still fire, catching it even if keyword-based filters wouldn’t.

Moreover, as models become more complex, alignment may require model-of-a-model oversight. We might train separate smaller models explicitly to read the larger model’s mind and flag concerns. This is sometimes called an “AI auditor” – an AI that inspects another AI. For instance, a small network could be trained on many examples of a big language model’s thoughts (activations) labeled by whether a harmful outcome occurred, learning to predict from the big model’s thoughts if it’s about to say something harmful. This auditor could then intervene. Such schemes rely on the premise that the big model’s internals expose its tendencies in time for intervention – which is exactly what interpretability research strives to ensure.

In conclusion, improved transparency directly translates to more controllable and trustworthy AI. It turns alignment from an abstract aspiration into a concrete engineering discipline: identify the component corresponding to an unwanted behavior, adjust or retrain it, verify the change via interpretability, and iterate. This synergy between understanding and controlling is why interpretability is often touted as one of the cornerstones of AI safety. By making the “black box” a bit more like a “glass box,” we empower developers and stakeholders to shape AI systems that not only perform well, but do so in ways that are compatible with our values and expectations.

References: The content above integrates findings and methods from a range of interpretability research, including but not limited to Sundararajan et al. (2017) (DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers), Lundberg & Lee (2017), Jain & Wallace (2019) (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL), Abnar & Zuidema (2020) ([2005.00928] Quantifying Attention Flow in Transformers), Vig et al. (2020) ([2004.12265] Causal Mediation Analysis for Interpreting Neural NLP: The Case of Gender Bias), Tenney et al. (2019) (BERT Rediscovers the Classical NLP Pipeline), Clark et al. (2019), Dai et al. (2022) (Knowledge Neurons in Pretrained Transformers), Wang et al. (2022) (INTERPRETABILITY IN THE WILD: A CIRCUIT FOR INDIRECT OBJECT IDENTIFICATION IN GPT-2 SMALL), and Burns et al. (2023) (Discovering Latent Knowledge in Language Models Without Supervision | OpenReview), among others. These works demonstrate the evolving landscape of transformer interpretability and its practical significance in aligning AI systems with human needs and values.