Using JSON to Structure GPT Prompts: A Personal Guide

Ever since I started experimenting with prompt engineering for GPT-based models, I’ve been on a quest for consistency. I remember the first time I asked ChatGPT for a structured output and got a beautifully formatted JSON snippet back – I was hooked. In this post, I want to share how using JSON formatting in prompts became my secret weapon for getting reliable, easy-to-parse responses from GPT models like ChatGPT or GPT-4. I’ll explain why JSON works so well with these models (spoiler: the AI has seen a lot of JSON), and how you can use JSON in system messages and user inputs to simulate schemas, organize instructions, and make your prompts ultra-clear. I’ll even throw in examples and practical use cases (from blog content generation to ad variations) that I’ve refined through practice. So grab a coffee, and let’s dive into the world of JSON-structured prompts!

Why JSON Works So Well with GPT Models

When I first started using JSON in my prompts, I had a hunch it would work, but I was blown away by how well it works. There are a few reasons for this success:

- Training Familiarity: GPT models have likely seen tons of JSON during training. They’re trained on vast internet data, and a surprising amount of the web is actually structured data. (One analysis noted that about 46% of web pages contain JSON-LD snippets – a form of JSON for linked data – which means GPT has been exposed to an enormous amount of JSON structure in its diet!). Developers have also been feeding GPT examples of JSON in prompts for a while, and newer models have even been fine-tuned on following JSON schemas. All this exposure means when GPT sees {...} with keys and values, it’s on familiar ground. The model knows “Oh, this looks like JSON, I know how this works.”

- Structural Alignment: JSON’s format is clear and unambiguous. It’s like giving the model a form or a template to fill out. Each key in a JSON object labels a piece of information, so GPT doesn’t have to guess what you mean – it’s explicitly spelled out. This structure is “like a guiding hand that steers GPT-4 towards an improved understanding of the user’s requirements”. In my experience, when I provide a JSON structure, the model aligns its output to match that structure, making the responses much more predictable. The curly braces, quotes, and colons guide the AI’s flow of thought, keeping it within the lines, so to speak.

- Preference for Structured Output: Because I often build applications or automations on top of GPT, I want answers I can parse programmatically. It turns out GPT is happy to oblige with JSON when asked correctly. Others have noted this too – they prefer JSON-formatted responses for software integration rather than free-form text. JSON is a lingua franca between humans and machines, and GPT seems to get that. When the AI outputs structured JSON, it’s immediately ready for use in code, no messy string parsing required. In short, JSON plays to GPT’s strengths (pattern completion and following examples) while also making my life easier as a developer.

- Clarity and Conciseness: Using JSON forces me to be concise and specific in prompts. I’m literally defining the fields I want. This clarity reduces ambiguity for the model. In fact, OpenAI themselves observed that before they introduced new structured output features, “developers [were] working around the limitations of LLMs… via prompting… to ensure outputs match the formats needed” – JSON being a prime example of such a format. A well-structured JSON prompt is like handing the model a checklist of exactly what’s needed. Less room for interpretation means more consistent results.

All these factors make JSON a sort of sweet spot for GPT interactions. The model is comfortable with it due to training exposure, it aligns with the model’s strength in pattern following, and it yields outputs that are immediately useful. It’s a win-win.

How to Use JSON in Your Prompts (and Why It Helps)

Okay, so JSON is great in theory – but how do we actually use it in practice when prompting GPT? Let me walk you through how I approach it, from setting up the conversation context (system message) to formatting the user input, all using JSON.

Structuring the System Message with a JSON Schema

One of my favorite tricks is to start the conversation with a system message that contains a JSON “schema” or template of what I want. Essentially, I describe the output format or the important fields in JSON form. This sets the stage for the AI.

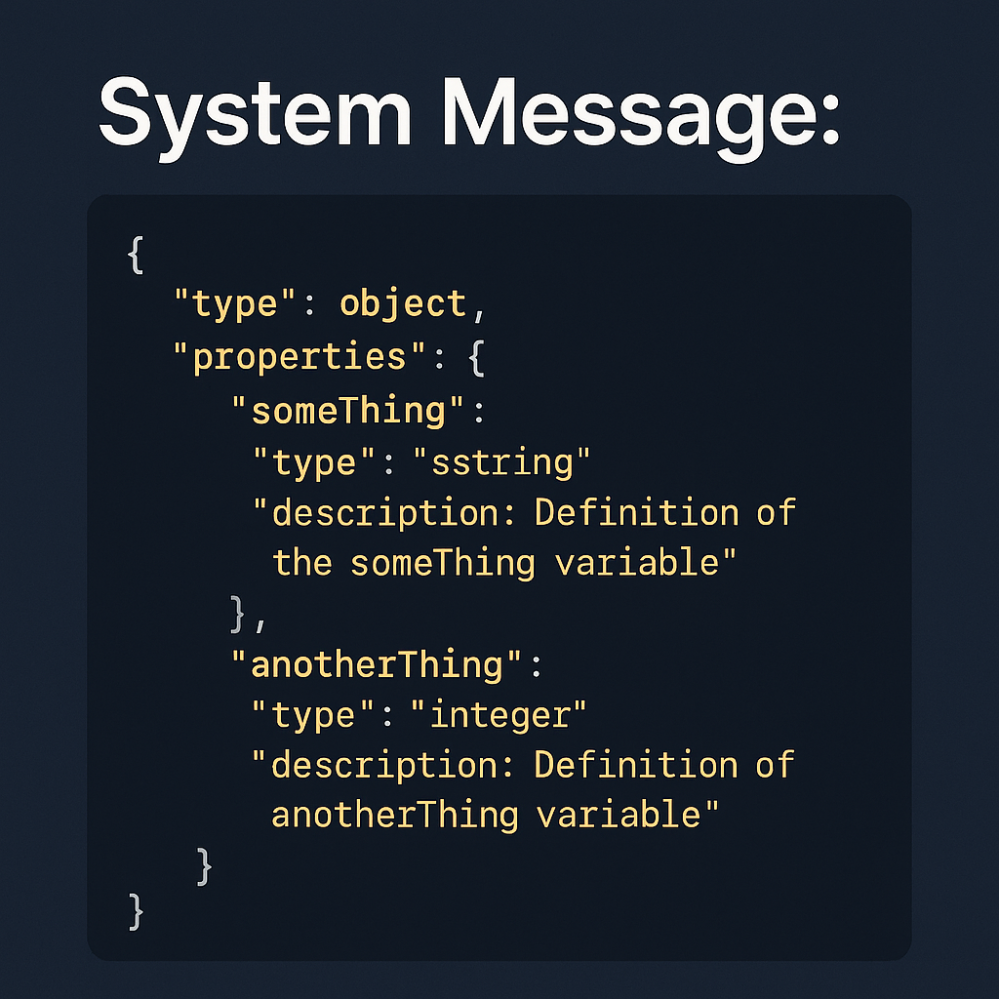

For example, if I want the assistant to output an object with specific fields, I might include a system message like this:

{
  "type": "object",
  "properties": {
    "someThing": {
      "type": "string",
      "description": "Definition of the someThing variable"
    },
    "anotherThing": {
      "type": "integer",
      "description": "Definition of the anotherThing variable"
    }
  }
}

What am I doing here? I’m basically simulating a JSON schema for the response I expect. I specify that the output should be an object with a "someThing" field (string) and an "anotherThing" field (integer), and I even describe what they are. This JSON schema isn’t being “enforced” by any programmatic means (it’s all just text to GPT), but the magic is that GPT will interpret it as instructions. In my experience, the model will usually adhere closely to this structure in its response.

Why use a JSON schema-like format in the system message? A few reasons:

- It clearly defines the roles and data we expect. There’s no confusion about what "someThing" or "anotherThing" refer to – we’ve documented it.

- It’s easy for the model to copy the structure. Large Language Models are extremely good at mimicking patterns – if you show a JSON skeleton, the model will fill it in. As one guide succinctly put it, “Models are very good at copying structure—give it something to copy.”

- It reduces the chance the model will drift into unwanted formats. I often add a note like “Respond with a JSON object following the above schema and nothing else.” This pairs the structural example with an explicit instruction. (GPT models are normally very chatty in plain English by default, so you sometimes have to gently rein them in. A simple “valid JSON only, no extra text” reminder does wonders, since these models are “trained to respond conversationally by default” and you’re nudging it toward a more formal output.)

This approach of a JSON-based system message is something I stumbled upon through trial and error, but it maps closely to OpenAI’s own developments. If you’ve heard of OpenAI’s function calling feature, it works in a similar way: you define a function with a JSON schema for parameters, and the model will return a JSON object fitting that schema. They essentially gave the model a built-in ability to fill in a JSON template. What we’re doing with a manual JSON prompt is the DIY version of that – and it’s surprisingly effective! (In fact, GPT-4 and newer were partly tuned for this, which “enhances reliability and programmatic integration” of structured outputs.) So, don’t hesitate to play schema-designer in your system prompt; the AI actually likes having that clear structure.
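To make the idea concrete, here is a minimal Python sketch of how the schema above could be embedded in a system message. The helper name `build_system_message` is my own invention for illustration, and the role/content dictionary is simply the common chat-message shape. Nothing here calls an API or enforces the schema; it only assembles the text the model would see.

```python
import json

# The schema from the example above, expressed as a Python dict.
SCHEMA = {
    "type": "object",
    "properties": {
        "someThing": {
            "type": "string",
            "description": "Definition of the someThing variable",
        },
        "anotherThing": {
            "type": "integer",
            "description": "Definition of the anotherThing variable",
        },
    },
}


def build_system_message(schema: dict) -> dict:
    """Hypothetical helper: put the instruction and the schema text in one system message."""
    content = (
        "Respond with a JSON object following the schema below and nothing else.\n"
        + json.dumps(schema, indent=2)
    )
    return {"role": "system", "content": content}


message = build_system_message(SCHEMA)
print(message["content"])
```

Generating the schema text with `json.dumps` rather than typing it by hand means the braces and quotes in the prompt are always well-formed.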

Formatting User Inputs as JSON Objects

Beyond the system message, I also often format my actual query or data as JSON when I send it to the model. This might feel a bit unnatural at first – we’re used to asking questions in plain English – but formatting the user message as JSON can dramatically improve how well the model understands what you want.

For instance, instead of asking in prose: “Give me analysis on X with perspective Y for audience Z”, I might send something like:

{
  "topic": "X",
  "perspective": "Y",
  "audience": "Z"
}

This is a simple key-value structure (a JSON object) containing the information relevant to my request. By doing this, I’m effectively labeling each part of my input. The model doesn’t have to infer that "topic" corresponds to what I want it to write about, or that "audience" is the target readership – I’ve made it explicit. It’s akin to filling out a form and handing it to the AI.

Let’s use the earlier schema example in a concrete way. Say my system message defined the schema for someThing and anotherThing. Now my user prompt could be:

{
  "someThing": "value",
  "anotherThing": 123
}

By providing this JSON, I’m giving the AI two pieces of data: "someThing" is "value" and "anotherThing" is 123. The assistant can then take this structured input and do whatever task I’ve set for it (perhaps combine them, transform them, or use them in some content). The key point is that JSON user inputs ensure clarity. There’s zero ambiguity – no pronouns or fuzzy references. Every value is tied to a key that explains what it is.

In my personal experience, when I feed inputs this way, the model’s responses are much more on-point. If I have a typo or slight format issue in the JSON, the model even sometimes guesses what I meant (or complains about a JSON parsing error, which is a clue I need to fix my prompt!). More often, though, the JSON input just sails through and the model responds in a structured manner.
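If you are sending these inputs from code, one way to avoid typos and format slips in the JSON is to let a serializer write it for you. The sketch below assumes a hypothetical helper, `make_user_message`, that turns keyword arguments into a JSON-formatted user message.

```python
import json


def make_user_message(**fields) -> dict:
    """Hypothetical helper: serialize labeled inputs into a JSON user message."""
    return {"role": "user", "content": json.dumps(fields, indent=2)}


user_message = make_user_message(topic="X", perspective="Y", audience="Z")
print(user_message["content"])

# Values containing quotes are escaped automatically, so the JSON stays valid.
tricky = make_user_message(topic='The "best" morning routine')
```

Because `json.dumps` handles quoting and escaping, the message content can always be parsed back into the same fields.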

Tip: This technique shines in multi-turn conversations too. I’ve had cases where the assistant’s previous answer is a JSON, and I can say “Update the previous JSON by changing X to 10 and adding a new field Y.” The model will then output the modified JSON correctly. It’s like we establish a contract of communicating in JSON, and the model diligently follows it. This aligns with what I’ve seen others do: for example, one developer demonstrated creating a JSON object, giving it a name, and then updating it through natural language instructions, with ChatGPT faithfully modifying the JSON each time. Once JSON is the mode of communication, the model sticks to it, which is wonderful for keeping interactions consistent.

Balancing Rigid Structure with Natural Instruction

Now, you might wonder: Do I always write everything in JSON? Not always. I often use a mix – the JSON to structure key details, and a bit of natural language to give the overall instruction. For example, a prompt could look like:

You are a helpful assistant. Using the data below, generate a short report.

Data: {"sales": 25000, "growth": "5%", "region": "EMEA"}

Here, I wrapped the data in a JSON object, but my high-level command “generate a short report” was in plain English. This combo works well: the JSON provides precise data, and the natural language provides the task context. GPT can easily incorporate both.

The main caution is to keep the prompt clear and not overly convoluted. If you bury the JSON in a heap of narrative instructions, it might get lost. I try to keep the JSON visually separated (e.g., on a new line or as a formatted block, like in the chat UI or with triple backticks if needed) so the model clearly sees “ah, here is a JSON blob of data”. Clarity is king. In fact, clarity is so important that one prompting guide specifically advises to “avoid vague instructions or extra requirements that could lead to inconsistencies”. In practice, I’ve found that simpler prompts with a clean JSON section outperform elaborate, wordy prompts that might confuse the model.
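Here is a small sketch of that mixed style in Python: a plain-English instruction followed by a JSON data block on its own line. The function name `build_prompt` and the exact layout are assumptions for illustration, not a required format.

```python
import json


def build_prompt(instruction: str, data: dict) -> str:
    """Hypothetical helper: plain-English task first, then the data as a separate JSON line."""
    return f"{instruction}\n\nData:\n{json.dumps(data)}"


prompt = build_prompt(
    "Using the data below, generate a short report.",
    {"sales": 25000, "growth": "5%", "region": "EMEA"},
)
print(prompt)
```

Keeping the JSON on its own line keeps it visually separated from the instruction, which is the point made above.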

To summarize this section, here are some best practices I follow when using JSON in prompts:

- Be explicit about JSON usage: I literally say something like “The response should be in JSON format” or “Here is a JSON with the input data”. Don’t assume the model will output JSON without being prompted – set that expectation clearly (e.g., “Respond with valid JSON only, no extra commentary.”). This helps shift the model from chatty mode to structured mode.

- Provide a schema or example: As shown, giving a mini JSON schema or an example JSON output in your prompt is like showing the model a blueprint. The model will follow it. If I want a list of objects, I might include an example list with one object. If I want specific fields, I show them. Think of it as priming the model with “copy this style!”.

- Keep it simple: Don’t mix too many formats or tasks. A prompt that says “Give me JSON, also do XYZ fancy thing, and by the way ensure humor and 3 jokes” can confuse the model’s focus. I break complex tasks into simpler structured steps when possible. Straightforward JSON structures paired with straightforward instructions yield the most reliable results.

- Use system messages if available: If you’re using the OpenAI API or a tool that allows system roles, use that to your advantage. I often set the system message to something like:

You are an AI that outputs answers in JSON format. You strictly output JSON with the structure given, and nothing else.

This upfront role definition can reduce the chances of the model drifting back into natural language explanations. In the ChatGPT web UI you don’t have an explicit system box (unless you have Developer Mode or use plugins), but you can simulate it by just starting your conversation with a similar instruction.

- Be ready to handle slight errors: Despite our best efforts, sometimes the model might slip an extra sentence or format something incorrectly (maybe a missing quote or an extra comma). It’s much rarer when you’ve given a clear JSON template, but it can happen, especially with very complex schemas. In critical applications, I always validate the JSON output with a parser. If it fails, I either manually edit the model’s response or ask the model to fix it. Often a gentle nudge like “Oops, that JSON had an error, please correct it to valid JSON.” will get the model to correct itself. Also, I’ve noticed setting a lower temperature (making the model less “creative”) tends to keep it on the straight and narrow format-wise. These are just safety nets – 95% of the time, if I’ve structured the prompt well, the JSON comes out fine.
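The validation step from the last point can be sketched in a few lines of Python. This is a minimal example, assuming the reply is a plain string; the function names `extract_json` and `missing_keys` are hypothetical, and the fallback (taking the span between the first `{` and the last `}`) is a simple heuristic rather than a robust parser.

```python
import json


def extract_json(reply: str):
    """Return the parsed JSON from a model reply, or None if nothing parses."""
    try:
        return json.loads(reply)
    except json.JSONDecodeError:
        pass
    # Fallback: the model may have wrapped the JSON in extra sentences,
    # so try the span from the first "{" to the last "}".
    start, end = reply.find("{"), reply.rfind("}")
    if start != -1 and end > start:
        try:
            return json.loads(reply[start : end + 1])
        except json.JSONDecodeError:
            return None
    return None


def missing_keys(obj: dict, required: list) -> list:
    """List the required keys that the parsed object does not contain."""
    return [key for key in required if key not in obj]


clean = extract_json('{"someThing": "value", "anotherThing": 123}')
chatty = extract_json('Sure! Here you go: {"someThing": "value"} Hope that helps.')
broken = extract_json('{"someThing": "value",}')  # trailing comma is invalid JSON
```

When `extract_json` returns `None`, or `missing_keys` returns a non-empty list, that is the moment to send the "please correct it to valid JSON" nudge or fix the reply by hand.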

Examples of JSON-Powered Prompting in Action

Let’s look at a couple of quick examples from my own usage to see how JSON formatting helps:

- Content Template Filling: I often generate structured content like blog sections or product descriptions. I’ll provide a JSON template such as:

{
  "title": "<blog title>",
  "intro": "<introduction paragraph>",
  "sections": [
    {
      "heading": "<section 1 heading>",
      "body": "<section 1 content>"
    },
    {
      "heading": "<section 2 heading>",
      "body": "<section 2 content>"
    }
  ],
  "conclusion": "<conclusion paragraph>"
}

In my prompt, I’ll ask the model to “Fill out this JSON template for the given topic.” Because the model sees the keys like title, intro, sections, etc., it will dutifully produce an output with all those parts populated. The result? A nicely organized blog post draft in JSON form. I can then easily extract each part or even render it into a document format. This beats getting one big blob of text and then trying to split it into sections manually.

- Persona-Based Responses: For marketing and persona-driven writing, JSON prompts shine as well. Suppose I want the AI to respond in a certain style or perspective – I can encode that in JSON:

{
  "persona": "Enthusiastic Fitness Coach",
  "audience": "People who are new to working out",
  "topic": "Benefits of a morning exercise routine",
  "tone": "encouraging and friendly"
}

Then I prompt: “Using the details above, write a 200-word social media post.” The model will understand that it needs to adopt the persona (voice) of an enthusiastic fitness coach, keep the audience in mind, stick to the topic given, and maintain an encouraging tone. All these variables are explicitly provided in the JSON, so the model is less likely to ignore one. It’s amazing to see the consistency: I can swap out "persona" or "topic" values in the JSON and run the prompt again, and I get a new post targeted appropriately. This consistency and reusability is exactly why JSON prompts are powerful – you can save that JSON structure and reuse it for dozens of different persona/content combos, ensuring a uniform style each time (a huge plus for content marketing teams maintaining a brand voice, for example).

- Ad and Copy Variations: When I need multiple versions of ad copy or marketing messages, I sometimes ask the model for output in JSON too, not just input. For example, I’ll prompt: “Generate 3 variations of a one-sentence ad tagline for the product, in JSON format as an array of objects with keys ‘tagline’ and ‘angle’.” The model then might return:

[
  {
    "tagline": "Boost Your Productivity with XYZ!",
    "angle": "focus on efficiency"
  },
  {
    "tagline": "XYZ – Your secret weapon for saving time.",
    "angle": "emphasize time-saving"
  },
  {
    "tagline": "Experience the future of productivity with XYZ.",
    "angle": "futuristic appeal"
  }
]

Now I have a JSON array of variations, each with a note about the angle or approach. This is super useful because I can easily loop through this array in code or hand it off to a colleague who can see the structure behind each suggestion. It also underscores how JSON formatting encourages me to think in terms of structured creativity – even creative outputs like taglines can be organized by angle, tone, length, etc., by leveraging JSON fields to label those aspects.

In all these examples, JSON is doing two things: helping me communicate clearly to the model what I want, and helping the model communicate back to me in a format I can work with easily. It removes a lot of guesswork on both sides.
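As a rough illustration of the "loop through this array in code" step, here is a short Python sketch that parses the tagline example and indexes it by angle. The `reply` string stands in for a model response; in practice it would come back from the API.

```python
import json

# Stand-in for the model's reply from the tagline example above.
reply = """[
  {"tagline": "Boost Your Productivity with XYZ!", "angle": "focus on efficiency"},
  {"tagline": "XYZ – Your secret weapon for saving time.", "angle": "emphasize time-saving"},
  {"tagline": "Experience the future of productivity with XYZ.", "angle": "futuristic appeal"}
]"""

variations = json.loads(reply)

# Index the taglines by angle so a teammate can look one up directly.
by_angle = {item["angle"]: item["tagline"] for item in variations}

for item in variations:
    print(f'{item["angle"]}: {item["tagline"]}')
```

Each variation is an ordinary dictionary once parsed, so filtering by angle or exporting to a spreadsheet needs no string splitting.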

Benefits for Automation and Teamwork

I want to highlight something that became apparent as I adopted JSON prompting: it’s not just about the model’s understanding, it’s also about making my workflow smoother, especially as a developer/content marketer who often works with teams.

- Easy Automation: Because prompts and responses are in JSON, it’s trivial to script things. I’ve written Python scripts to generate prompt JSON from spreadsheets of data (for example, a list of product specs to feed into a product description template), and then feed those to GPT. The outputs come back as JSON which I can directly parse (json.loads() in Python is my friend). This means I can automate content generation pipelines end-to-end. No more regex-ing a giant text blob hoping to extract the right pieces – the JSON keys are right there. As one article noted, receiving structured outputs like JSON saves time and makes downstream processing seamless – I feel this every day now. If you’re doing growth hacking or content at scale, this is a huge win.

- Consistency and Reuse: JSON prompts are easy to tweak and reuse. I keep a collection of JSON prompt templates (for different tasks like summaries, blog outlines, Q&A, etc.). When I need a new variation, I just change a value or two. The underlying structure remains the same, giving consistent style and fields in the output. This reusability means faster development of new prompts and less chance of error. It’s like having a set of fill-in-the-blank forms for the AI. In team settings, I’ve shared these JSON templates with colleagues. Even if someone is not super technical, they can fill in the blanks in a JSON snippet for their needs. The structured format “offers a common ground for users to share and edit… maintaining a unified approach to AI interactions”. I’ve seen a non-developer marketer colleague successfully use a JSON prompt I gave them, just by replacing the text values – they didn’t need to understand all the AI lingo, the JSON labels guided them on what to input where.

- Reduced Miscommunication: When collaborating on prompt design, using JSON forces everyone to agree on what the fields and requirements are. It’s much less open to interpretation than a paragraph of instructions. If we decide that every response should have, say, {"intro": ..., "mainPoints": [...], "callToAction": ...}, then everyone knows what that means. This makes teamwork on AI content projects more efficient – a content strategist can specify the desired structure in JSON, and a developer can implement it, and the AI will adhere to it. No lost details in translation.

- Adapting to Future Tools: Interestingly, the manual JSON prompting approach prepared me for more advanced features. When OpenAI rolled out function calling and tools that expect JSON, I was already thinking in JSON terms. The transition was natural. And even if you’re just sticking to plain GPT usage, you’re kind of future-proofing your prompts – structured prompting is likely here to stay, because it aligns so well with how AIs operate. One could say JSON is a lingua franca between humans instructing AI and the AI delivering data back.
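The spreadsheet-to-prompt step mentioned under Easy Automation might look something like the sketch below. It is only an illustration: the CSV text stands in for a spreadsheet export, and the column names and the `rows_to_prompts` helper are made up for this example.

```python
import csv
import io
import json

# Stand-in for a spreadsheet export; the columns are invented for illustration.
SHEET = """name,material,weight_g
Trail Mug,stainless steel,310
Desk Lamp,aluminium,950
"""


def rows_to_prompts(csv_text: str) -> list:
    """Hypothetical helper: turn each spreadsheet row into one JSON prompt payload."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [json.dumps(row, indent=2) for row in reader]


prompts = rows_to_prompts(SHEET)
for payload in prompts:
    print(payload)
```

Note that `csv.DictReader` reads every cell as a string, so numeric columns such as `weight_g` would need converting if the prompt should carry real numbers.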

A Note on OpenAI’s Function Calling (and How JSON Prompting Relates)

I’ve hinted at OpenAI’s function calling and structured output features a couple of times, but let me clarify for those unfamiliar: function calling is a feature where you define a schema (using JSON Schema format) for a “function”, and the model can respond with a JSON object of arguments meant to match that schema. It’s like a formal version of what we’ve been doing manually. On its own, function calling makes schema-conformant JSON much more likely but does not guarantee it, so validating the output is still wise. OpenAI has since added two related features that are easy to confuse: JSON mode, which ensures the output is valid JSON but not that it matches any particular schema, and Structured Outputs, which constrains the model’s output to follow a JSON Schema you supply.

Why do I bring this up? Because it validates the whole approach of JSON prompting. The fact that the AI researchers are building official features around JSON formatting means that we’re not hacking the model in a weird way – we’re actually playing to its strengths. Our manual JSON-structured prompts are essentially a DIY version of function calling. The difference is just that function calling is more robust (the model was specifically tuned for it and will less often make mistakes in the JSON). But even if you’re not using the API or these advanced features, you can still reap similar benefits by structuring your prompts with JSON formatting. I often tell fellow prompt designers: look at the function calling documentation for inspiration on how to structure your manual prompts. You’ll notice it’s all about defining properties, types, and descriptions – exactly what we can do in a system message or prompt as we saw earlier.
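For comparison with the manual schema from earlier, here is a sketch of what a function (tool) definition could look like as a Python dict. The layout follows my understanding of the `tools` format in OpenAI's Chat Completions API, including the `strict` flag and its companion requirements (`additionalProperties` set to false and every property listed in `required`); treat those details as assumptions and check the current documentation before relying on them. The function name `record_things` and the helper `make_tool` are hypothetical, and no API call is made here.

```python
import json


def make_tool(name: str, description: str, properties: dict) -> dict:
    """Hypothetical helper: wrap JSON Schema properties in a tool definition."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "strict": True,  # assumed flag for strict schema adherence
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": list(properties),
                "additionalProperties": False,
            },
        },
    }


tool = make_tool(
    "record_things",
    "Store the two example values.",
    {
        "someThing": {"type": "string", "description": "Definition of the someThing variable"},
        "anotherThing": {"type": "integer", "description": "Definition of the anotherThing variable"},
    },
)
print(json.dumps(tool, indent=2))
```

The `properties` block is the same one used in the system-message schema earlier, which is why the manual approach carries over so easily.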

So, if you hear about “function calling” but you’re working in ChatGPT’s UI or a context where you can’t use it directly, don’t worry. Just know that JSON prompting is a tried-and-true method to get more consistent outputs. It’s basically what the pros are doing under the hood.

Final Thoughts: My Personal Takeaway

Switching to JSON-formatted prompts has been a game-changer for me. At first, it felt a bit like talking to the AI in a robotic way, but now I see it as speaking more clearly to the AI. When I use JSON in prompts, I imagine I’m giving GPT a neatly organized TODO list – and GPT, being the eager assistant it is, checks off each item and hands me back a nicely wrapped result.

In an informal sense, I’ve found JSON prompts to reduce the “surprises” I get from the model. There’s nothing more satisfying than seeing the model’s output drop directly into my app without errors, or having it follow a content outline to the letter. It’s gotten to the point where if a prompt I write is giving me trouble, I step back and ask: “Can I JSON-ify this?”. Nine times out of ten, breaking the task down into a JSON structure fixes the issue.

For anyone (developer, marketer, or AI enthusiast) reading this and thinking of trying it: go for it. You don’t need to overhaul all your prompts overnight. Start small – maybe take a task you often do and wrap the input or output requirements in JSON, as an experiment. You’ll likely be pleasantly surprised at how the model responds. And if it doesn’t work perfectly the first time, tweak the schema or provide a short example. The process of refining JSON prompts is itself pretty fun – it feels like collaborating with the AI to design the format.

In a world where AI models can sometimes be unpredictable, using JSON formatting in your prompts is a way to bring a bit of order to the chaos. It has certainly made my interactions with GPT-4 more reliable, clear, and efficient. Plus, it appeals to the developer in me who loves structured data and the marketer in me who loves consistent messaging – truly the best of both worlds!

So that’s my story of how I discovered and fell in love with JSON-structured prompts. Hopefully, these tips and insights help you on your own journey to better AI conversations. Give it a try – your future self (and any code processing the AI’s output) will thank you for it. Happy prompting!

Sources:

- Bob Main, “ChatGPT and JSON Responses: Prompting & Modifying Code-Friendly Objects.” – Medium, Aug 15, 2023.

- Chris Pietschmann, “How to Write AI Prompts That Output Valid JSON Data.” – Build5Nines, Apr 8, 2025.

- Ben Houston, “Building an Agentic Coder from Scratch.” – Personal Blog, Mar 20, 2025.

- OpenAI, “Introducing Structured Outputs in the API.” – OpenAI News, Aug 6, 2024.

- {Structured} Prompt Blog – “Four Benefits of Using JSON Prompts with GPT-4.”